Viewing Conversations

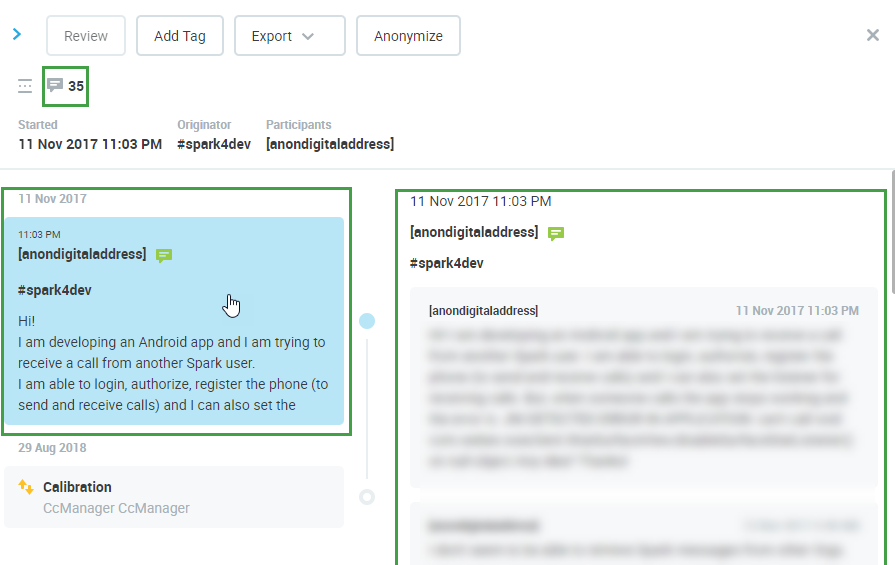

Conversations include recorded calls and screen conversations as well as emails and chats. Currently, we support the integration of emails from Salesforce.

Selecting a conversation row shows the conversation preview on the right of the screen. The preview shows key information about the conversation, including the direction, type, calling parties, conversation segments, review status, flags or comments, and summaries of the recording generated by the Eleveo Generative AI integration (installation dependent).


To see more detailed information about the selected conversation, click the expand arrow to show the details.

Conversation Explorer displays the details of the selected conversation for all its segments (calls, chats, and emails). If available, these include review details such as the Questionnaire name, Reviewer, Reviewee, and score, and technical information such as Correlation IDs (SID).

Results of Reviews

The results of Reviews connected to conversations are displayed within the Preview Pane to users with the appropriate permissions. Results are not visible to agents from within the conversation screen, even if the Reveal Results to Agent option is selected when creating the review.

Additional information is provided in the Detail Pane.

Detail Pane

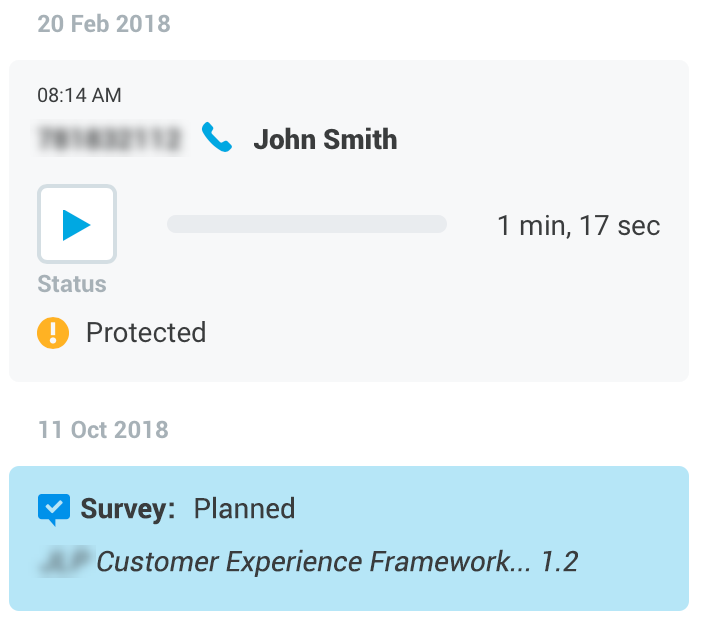

Click an item in the Preview Pane to expand the Detail Pane. Click on the info icon to view details.

To view detailed information about the selected segment or conversation while the Detail Pane is open, click an object to select it. The information displayed varies based on what is selected, and the available content is installation-dependent.

Functionality may vary based on your deployment and licensed/installed features:

- The permission AI_Outputs_View is required in order to view the Summary, Topics, and Flags.
- An additional permission (AI_RATING_VIEW) is required in order to view the AI Rating in the UI.

Note: Very short recordings (where the transcription file contains five words or fewer) are not processed by the Speech Generative AI server. They therefore have no AI Rating, Summary, Topics, or Flags.
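The permission and length rules above can be summarized as a small decision function. This is a hypothetical sketch, not an Eleveo API. The function name, the whitespace-based word count, and the reading of "additional permission" as meaning a user needs both AI_Outputs_View and AI_RATING_VIEW to see the AI Rating are all assumptions.

```python
from __future__ import annotations

# Hypothetical model of the documented display rules; not part of any Eleveo API.
AI_OUTPUTS_VIEW = "AI_Outputs_View"
AI_RATING_VIEW = "AI_RATING_VIEW"
MAX_SKIPPED_WORDS = 5  # transcriptions with five words or fewer are not processed


def visible_ai_outputs(permissions: set[str], transcript: str) -> list[str]:
    """Return the AI outputs a user would see for one recording (sketch)."""
    # Assumption: words are counted by splitting the transcription on whitespace.
    if len(transcript.split()) <= MAX_SKIPPED_WORDS:
        return []  # never sent to the Speech Generative AI server
    outputs: list[str] = []
    if AI_OUTPUTS_VIEW in permissions:
        outputs += ["Summary", "Topics", "Flags"]
        # Assumption: AI_RATING_VIEW is needed in addition to AI_Outputs_View.
        if AI_RATING_VIEW in permissions:
            outputs.append("AI Rating")
    return outputs


if __name__ == "__main__":
    print(visible_ai_outputs({AI_OUTPUTS_VIEW}, "Thanks, bye."))
    print(visible_ai_outputs({AI_OUTPUTS_VIEW, AI_RATING_VIEW},
                             "I have a technical issue with the product."))
```

In this sketch, the length check runs first because a skipped recording has no outputs to show, whatever the user's permissions are.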

Available Options

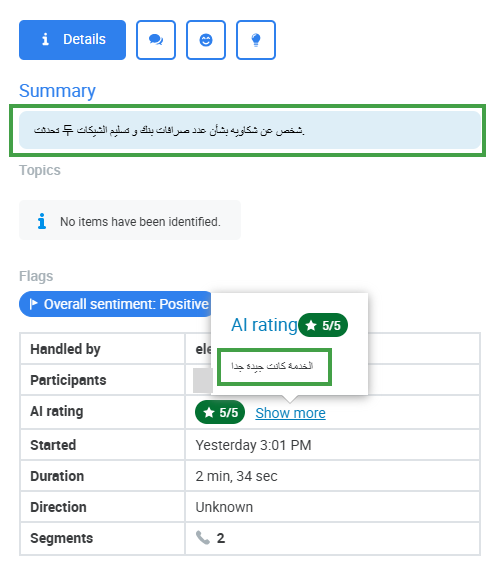

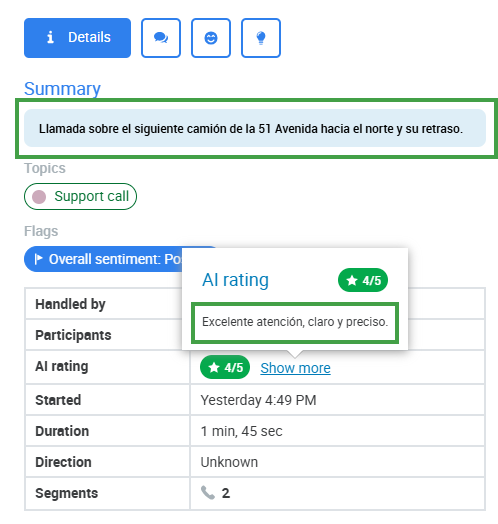

Summary, Topics, Flags, and the AI Rating (with the Reason for the Score) are provided in one of the Supported Languages.

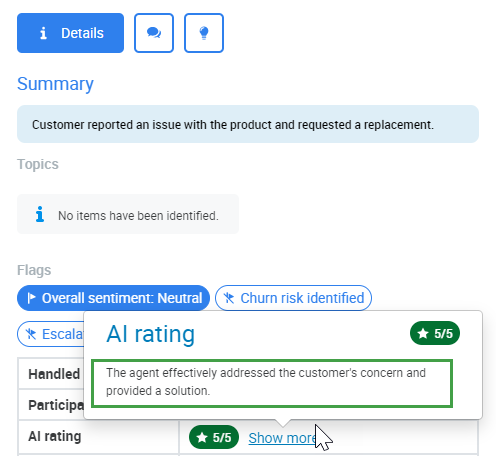

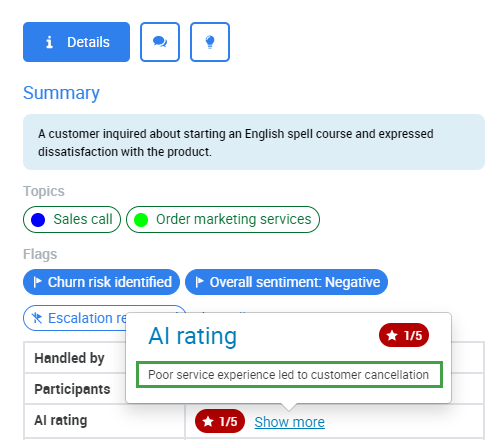

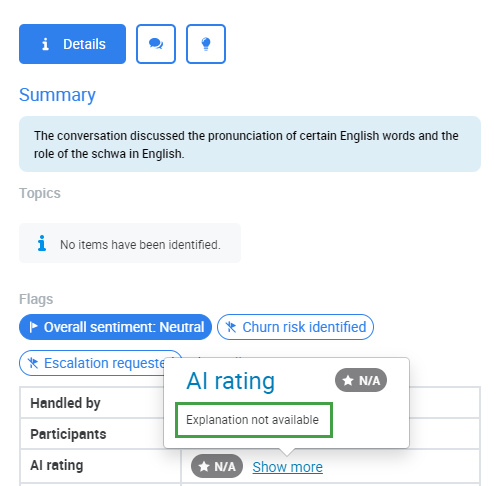

Summary

A brief summary of the conversation (up to 300 characters), generated by the Speech Generative AI service, is displayed if available.

If the summary would otherwise contain a name or other identifying information, speakers in the conversation are identified as Participant 1 or Participant 2.

An example summary: “Participant 1 stated that they have a technical issue with the product. …..”

Topics

If available, topics detected by the Speech Generative AI service are displayed. If no topics are identified for a conversation, the message No items have been identified is displayed.

Topics are created by an administrator, and the system then analyzes each new conversation for matching topics. Only the first three (3) topics detected are displayed in the Detail Pane of Conversation Explorer.

Example topics - Each industry will have different topics configured.

Flags

Localized according to User settings

The overall sentiment of the conversation is shown using one or more predefined flags. Relevant flags are highlighted (in blue) if detected. Flags that are not detected are neither highlighted nor shown by default. Click Show all to view all available options. Note: Flags are shown in the language set for the current user in the User Profile.

- Default flags include: Overall sentiment (Positive, Neutral, Negative), Churn risk identified, Escalation requested, and Repeat contact.

General Information

General information about the entire conversation:

- Handled By – Name or number of the agent (if detected)
- Participants – Number of participants (customer/agent, if detected)
- AI rating – The Speech Generative AI server is asked to evaluate every conversation based on the following question: "Can you evaluate quality of service provided in this conversation with a score from 1 to 5 where 5 is the highest." The resulting score is visible to users with the appropriate privileges (AI_RATING_VIEW). Click Show more to view a brief explanation for the rating provided.
- Started – Time and date the conversation began
- Duration – Length of the conversation
- Direction – Inbound, Outbound, or Unknown
- Segments – Number and type of segments

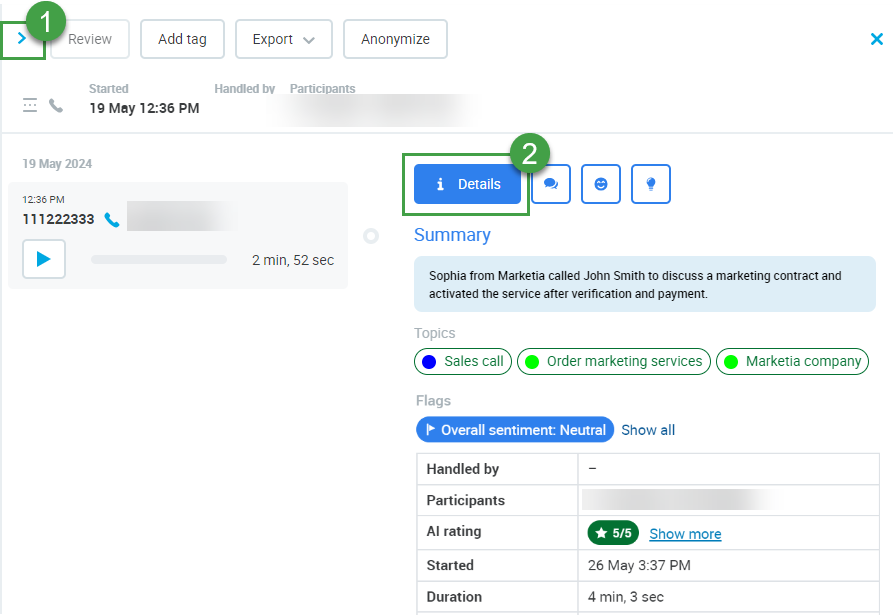

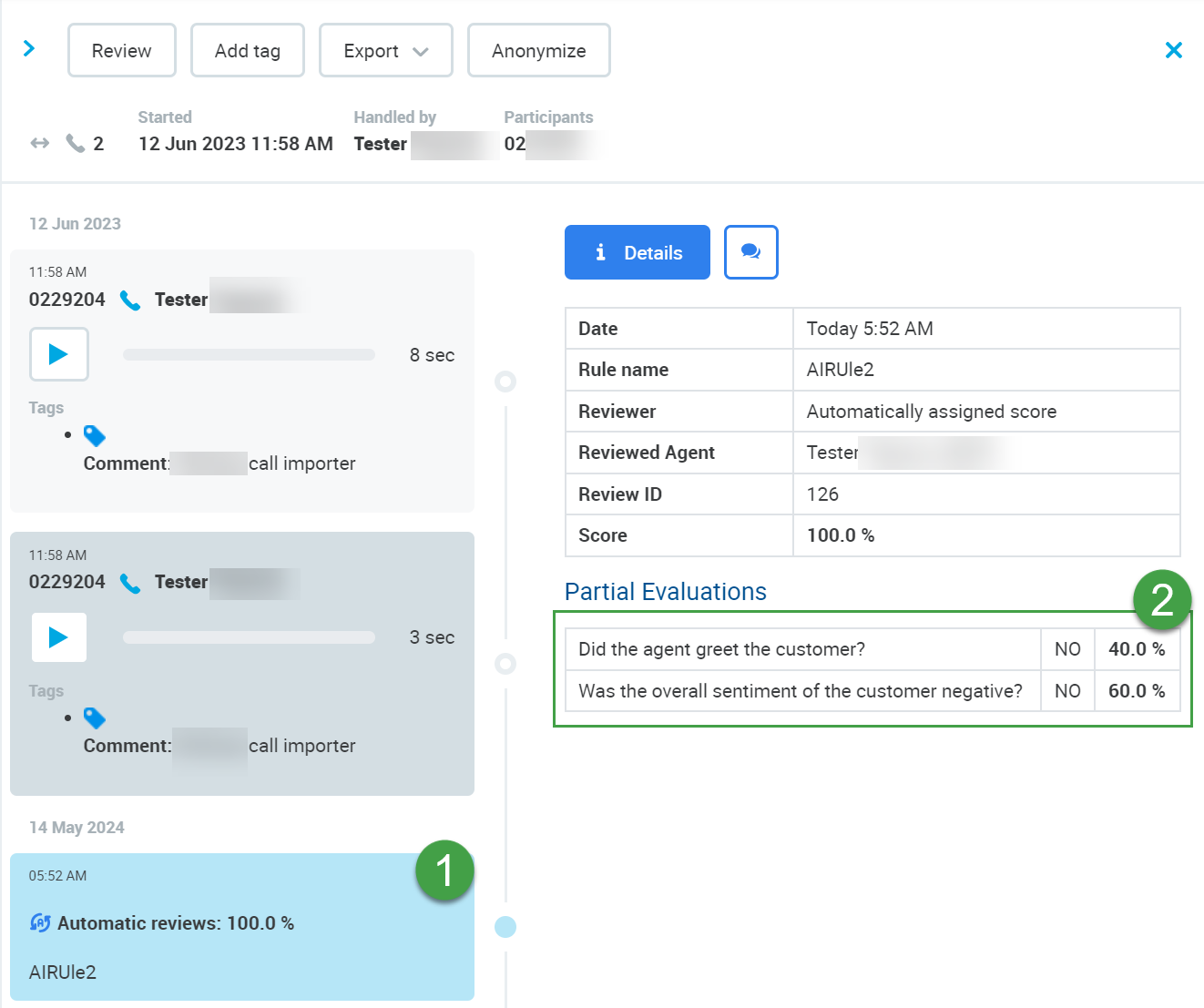

Automatic Review Score (AQM)

The score assigned to a conversation by the user-defined Automated Rules is displayed in a dedicated column on the Conversation Explorer and also within the Preview Pane (1) to users with the appropriate permissions.

Additional information is provided in the Detail Pane (2).

Click an item in the Preview Pane to expand the Detail Pane.

The following information is listed (additional information may be displayed, depending on the installation):

- Date: Creation date
- Rule Name: Name of the Rule used
- Reviewer: Automatically assigned score
- Reviewed Agent: Name of the agent
- Review ID: ID number
- Score: Total score assigned by the Automated Rule
- Partial Evaluations: A detailed breakdown of how the score was calculated

If Speech Generative AI is deployed, the following information is also displayed:

- The question used in the Automated Rule (text only)
- The result provided by the Speech Generative AI server. Possible responses include YES, NO, and N/A.
- Calculated score: The score assigned for each question, based on your configured Automated Rule
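The Partial Evaluations breakdown can be read as a table of (question, AI response, calculated score) rows. The sketch below assumes a simple additive model in which each possible response maps to a configured number of points, and the total is their sum. The class, function, sample questions, and point values are hypothetical. Real scoring is defined by your Automated Rule configuration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RuleQuestion:
    """One question in a hypothetical Automated Rule."""
    text: str
    points: dict[str, float]  # assumed: configured points for each possible answer


def partial_evaluations(
    questions: list[RuleQuestion], answers: dict[str, str]
) -> tuple[list[tuple[str, str, float]], float]:
    """Pair each question with the AI answer and its calculated score; return rows and total."""
    rows: list[tuple[str, str, float]] = []
    for q in questions:
        answer = answers[q.text]  # "YES", "NO" or "N/A" from the Speech Generative AI server
        rows.append((q.text, answer, q.points.get(answer, 0.0)))
    total = sum(score for _, _, score in rows)  # assumed additive total
    return rows, total


if __name__ == "__main__":
    rule = [
        RuleQuestion("Did the agent greet the customer?", {"YES": 10, "NO": 0, "N/A": 0}),
        RuleQuestion("Did the agent offer further help?", {"YES": 5, "NO": 0, "N/A": 0}),
    ]
    answers = {
        "Did the agent greet the customer?": "YES",
        "Did the agent offer further help?": "N/A",
    }
    rows, total = partial_evaluations(rule, answers)
    for text, answer, score in rows:
        print(f"{text} -> {answer} ({score})")
    print("Total:", total)
```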

Results of Automatic Rules with Generative AI

If Speech Generative AI is installed (and used as part of an Automated Rule), the results are displayed in the Detail Pane. In the image below, the name of the rule and the overall score are displayed in the Detail Pane (#1). The questions defined in the Automated Rule and the responses provided by the Speech Generative AI server are listed in the Partial Evaluations list (#2).

Results of Manual Evaluations

- Date – Creation date
- Questionnaire Name – Name of the Questionnaire used
- Questionnaire version – Version of the Questionnaire used
- Questionnaire ID – ID of the Questionnaire
- Reviewer – Name of the reviewer
- Reviewed Agent – Name of the agent
- Review ID – ID number
- Score – Total score
- Reason for the Score – The comment is displayed (if it exists)

Status

- Additional system information

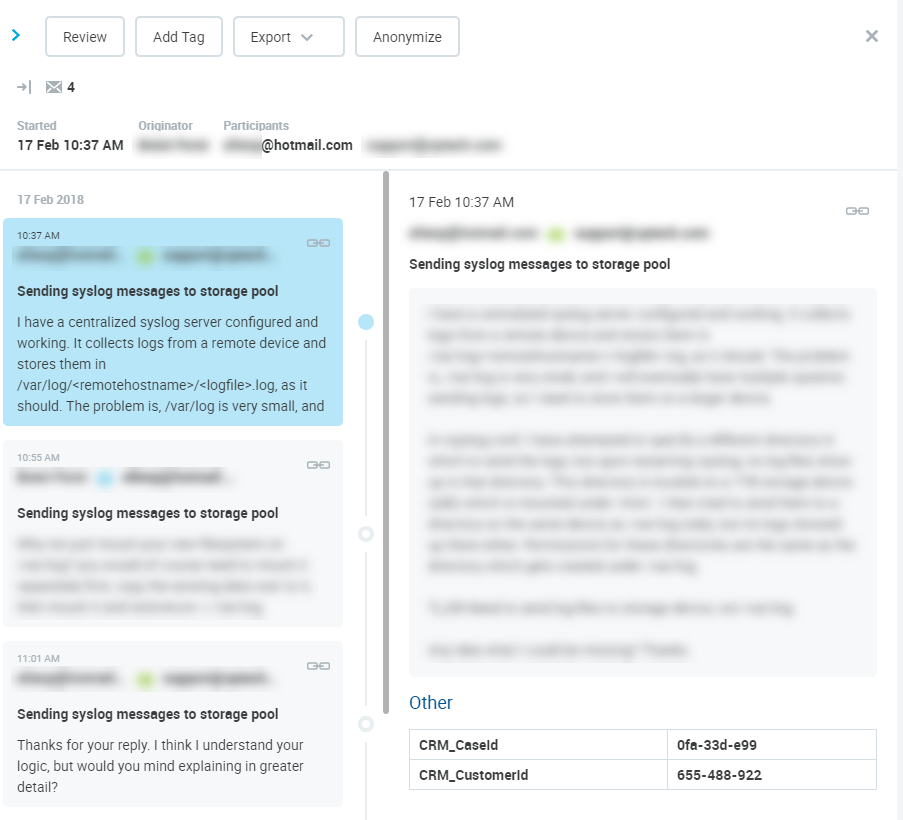

Custom /Other Data

- Installation-dependent technical information is displayed

Technical Information

- Conversation ID / Correlation IDs (SID) – The correlation ID is only visible in the Conversation Detail pane if the media is selected.

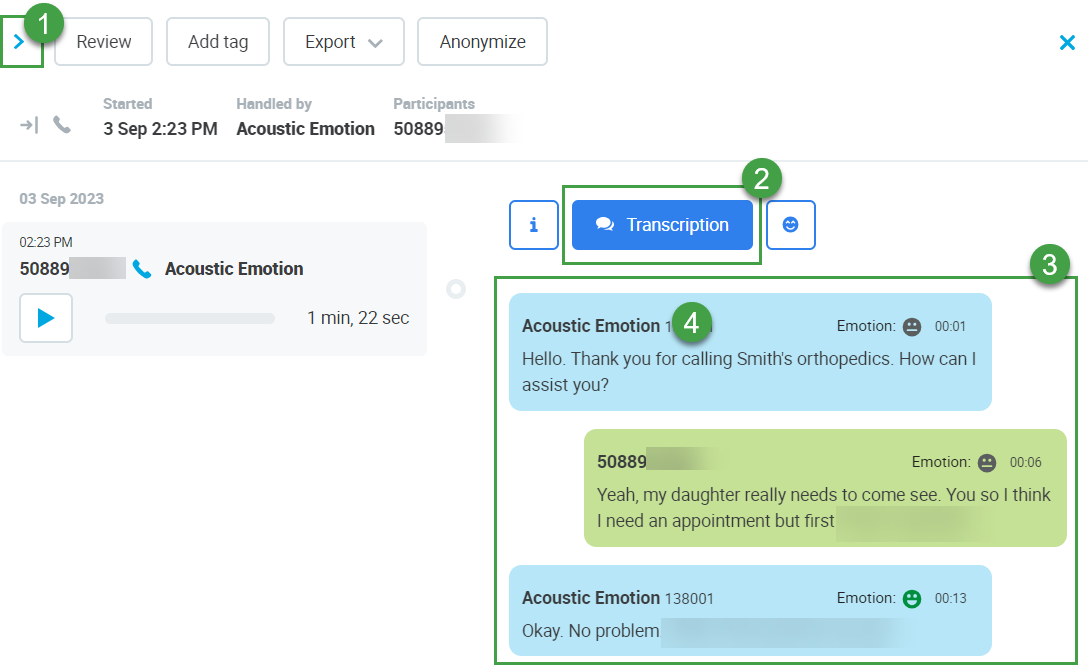

Transcription

multilingual

Any conversation that contains a transcription is viewable in Conversation Explorer, even if the audio file is not available. If the audio file is missing for any reason, the transcription is still available, and the conversation remains on the list of available conversations until it is deleted by a Retention Policy.

- Click an item in the Preview Pane to expand the Detail Pane.
- Click the Transcription icon to view the transcription.
- The emotion is shown for each transcribed utterance (sentence). The emotion detection feature combines acoustic features and word sentiment scores to determine the emotion of each individual sentence. This is installation-dependent and is not supported for all languages.
- If the system is able to identify the participant, their name is shown for each transcribed phrase. If the system cannot identify the speaker, a phone number is displayed instead.

Background noise and other sounds are not included in the transcript. Only words/phrases are included in the transcription.

The five levels of emotion displayed for each utterance are as follows:

| Icon | Emotion |
|---|---|
|  | Mostly Positive |
|  | Positive |
|  | Neutral |
|  | Negative |
|  | Mostly Negative |

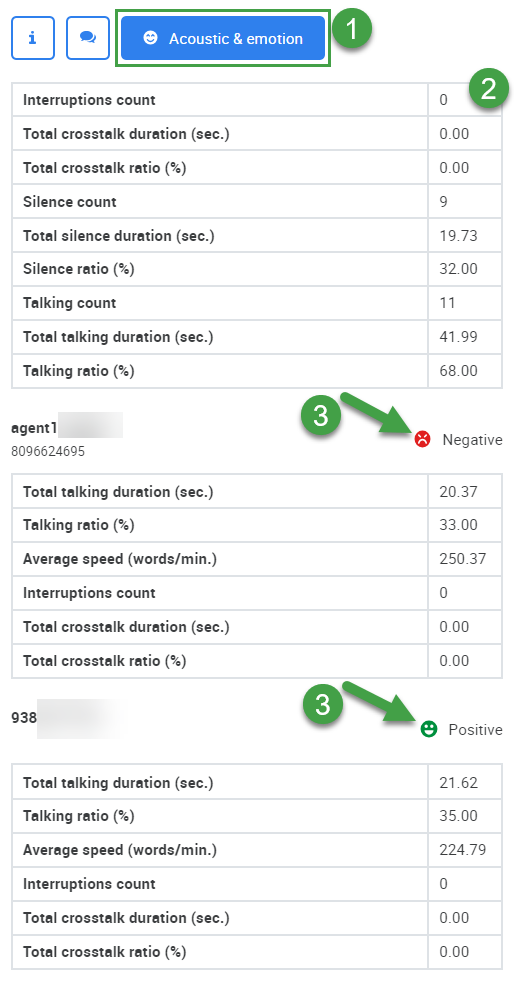

Emotion and Acoustic Parameters

This feature is only supported for selected languages.

- Click an item in the Preview Pane to expand the Detail Pane.
- Click the Emoticon icon to view the emotion and acoustic parameters.
- The list of available data is displayed.

Data available may include:

- General statistics – Aggregated for the entire conversation
  - Interruptions count – Number of interruptions
  - Total crosstalk duration (sec.) – Total time that the speakers were interrupting or speaking over each other
  - Total crosstalk ratio (%) – Ratio of time that the speakers were interrupting or speaking over each other
  - Silence count – Number of silences longer than 800 milliseconds. The silence count can therefore be 0 while Total silence duration is greater than 0, because Total silence duration includes all silent time, even short pauses.
  - Total silence duration (sec.) – How much time was silent (no audio)
  - Silence ratio (%) – Ratio of time that was silent relative to talk time
  - Talking count – Total count of utterances (i.e. phrases or sentences in the transcription)
  - Total talking duration (sec.) – Total time a participant was speaking
  - Talking ratio (%) – How much time (as a ratio) a participant was speaking
- Speaker specific statistics
  - Gender (Male/Female) – If detected, the system displays the gender of the speaker (this information is not displayed unless configured by an administrator)
  - Total talking duration (sec.) – Total time the participant was speaking
  - Talking ratio (%) – How much time (as a ratio) the participant was speaking
  - Average speed (words/min.) – How fast the speaker was speaking: the average number of words per minute (rounded to 2 decimal places)
  - Interruptions count – Number of interruptions (times the speakers spoke over each other)
  - Total crosstalk duration (sec.) – Total time that the speaker was interrupting or speaking over the other
  - Total crosstalk ratio (%) – Ratio of time that the speaker was interrupting or speaking over the other
  - Average talk speed – Average number of words spoken per minute
  - Agent talking ratio – Percentage of the call in which the agent is speaking
  - Agent crosstalk ratio – Percentage of the call in which there is crosstalk
  - Agent number of interruptions – Number of times crosstalk is detected
- The emotion of each participant is shown.
  - The emotion shown can be improving, positive, neutral, negative, or worsening. This is the overall emotion of one party during the last segment they participated in. In other words, it is the emotion of the speaker when they left the call.

The five levels of emotion displayed by each of the participants when they leave the conversation are as follows:

| Icon | Emotion |
|---|---|
|  | Improving |
|  | Positive |
|  | Neutral |
|  | Negative |
|  | Worsening |
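The sketch below computes a few of the statistics listed above from a list of timed utterances. It illustrates the difference between Silence count and Total silence duration, and shows how crosstalk and talking ratios relate to the conversation timeline. This is a hypothetical illustration, not Eleveo's implementation. The Utterance structure is invented. Ratios are assumed to be relative to the full conversation duration, and average speed is assumed to be words per minute of the speaker's own talking time. Only the 800 ms silence threshold and the rounding to 2 decimal places come from the list above.

```python
from __future__ import annotations

from dataclasses import dataclass

SILENCE_COUNT_THRESHOLD_S = 0.8  # only silences longer than 800 ms are counted


@dataclass
class Utterance:
    """Hypothetical timed utterance from a transcription."""
    speaker: str
    start: float  # seconds from the start of the conversation
    end: float
    words: int


def conversation_stats(utterances: list[Utterance], duration: float) -> dict[str, float]:
    """Compute a few general and per-speaker statistics (illustrative sketch)."""
    # Sweep the timeline: +1 when someone starts talking, -1 when they stop.
    # The two 0-delta markers make leading and trailing silence visible.
    events = [(0.0, 0), (duration, 0)]
    for u in utterances:
        events += [(u.start, 1), (u.end, -1)]
    events.sort()

    active, prev_t = 0, 0.0
    silence_total = crosstalk_total = 0.0
    silence_count = 0
    for t, delta in events:
        span = t - prev_t
        if active == 0 and span > 0:
            silence_total += span  # every silent stretch adds to the duration...
            if span > SILENCE_COUNT_THRESHOLD_S:
                silence_count += 1  # ...but only long ones add to the count
        elif active >= 2:
            crosstalk_total += span  # two or more people talking at once
        active += delta
        prev_t = t

    stats: dict[str, float] = {
        "silence_count": silence_count,
        "total_silence_duration_s": silence_total,
        "total_crosstalk_duration_s": crosstalk_total,
        "total_crosstalk_ratio_pct": 100 * crosstalk_total / duration,
    }
    talking: dict[str, float] = {}
    words: dict[str, int] = {}
    for u in utterances:
        talking[u.speaker] = talking.get(u.speaker, 0.0) + (u.end - u.start)
        words[u.speaker] = words.get(u.speaker, 0) + u.words
    for speaker, seconds in talking.items():
        stats[f"{speaker}_talking_ratio_pct"] = 100 * seconds / duration
        stats[f"{speaker}_avg_speed_wpm"] = round(words[speaker] * 60 / seconds, 2)
    return stats


if __name__ == "__main__":
    sample = [
        Utterance("Agent", 0.0, 4.0, 8),
        Utterance("Customer", 3.5, 6.0, 5),
        Utterance("Agent", 6.5, 9.0, 5),
    ]
    for name, value in conversation_stats(sample, duration=10.0).items():
        print(f"{name}: {value}")
```

In the sample, the 0.5 s pause between 6.0 s and 6.5 s adds to Total silence duration but not to Silence count. The 1.0 s of silence at the end adds to both.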

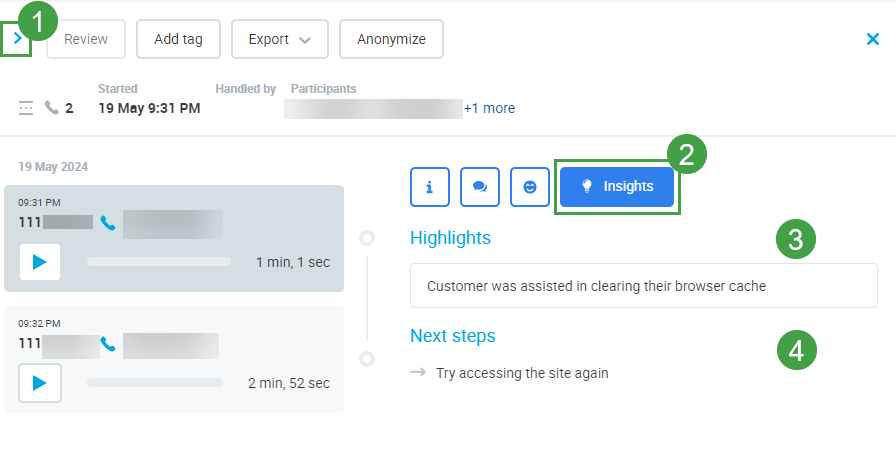

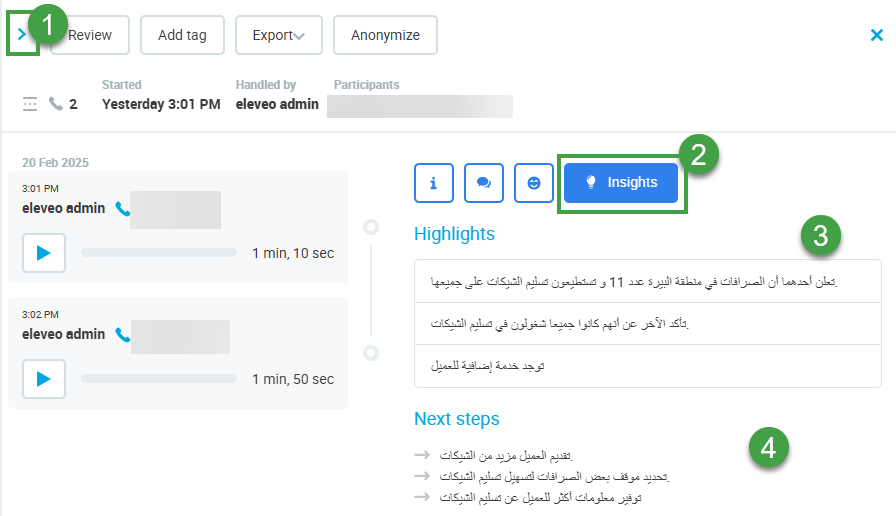

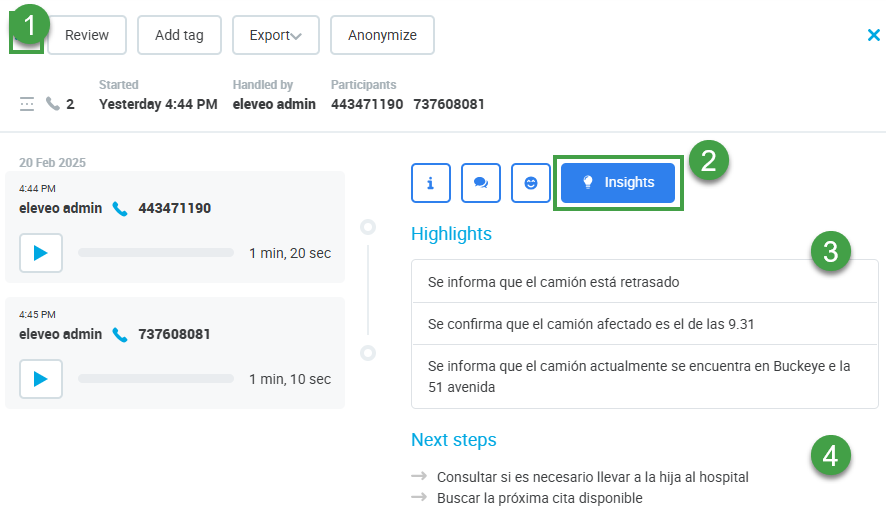

Insights

This feature is only supported for installations with Speech Generative AI. In order to be processed, transcriptions for the conversation must be available, and at least one party to the conversation must have the appropriate permission assigned (AI_OUTPUTS_VIEW).

multilingual

- Click an item in the Preview Pane to expand the Detail Pane.
- Click the Insights icon to view more details.
- Highlights – Key points taken from the conversation are listed. This is not a summary, but a list of important topics and events in the conversation.
- Next steps – The system lists key points requiring follow-up, or recommendations made.

An example of Insights in other supported languages:

Chats / Emails

Chat conversations are grouped together, as shown below, until another segment type, such as a call or email, occurs.

In the case of emails, all details such as sender, sent date, recipients and subject will be shown. Click each conversation segment in the thread to see it expanded on the right.

Viewing the content

If an agent switches teams, who can view their conversations?

If an agent switches teams, the previous Supervisor can view the agent's conversations from before the team change, and the new Supervisor can view conversations from after the team change. However, the server indexes conversations in a way that affects updates. If an older conversation is updated (for instance, a tag is added to it from the Quality Management UI), it becomes visible to the new Supervisor, because the agent is assigned to that Supervisor at the time of the update. The conversation is then no longer visible to the previous Supervisor.

Case Example: An agent works for Team A until the end of May and is moved to Team B from the 1st of June. Supervisor A (Team A) can see all conversations handled by the agent until the end of May. Supervisor B (Team B) can view only conversations from the 1st of June onward. However, suppose a conversation created in May is updated, for example when Supervisor A adds a tag during a routine review. It then becomes visible to Supervisor B, because the agent is a member of Team B at the moment the conversation is updated.
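The case example can be expressed as a rule. A supervisor sees a conversation if the agent belonged to the supervisor's team when the conversation was last indexed, that is, when it was created or last updated. The sketch below models only the behavior described above, not the server's actual indexing logic. The class and function names are hypothetical, and the year in the sample dates is arbitrary.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass
class Conversation:
    """Hypothetical record; only the dates that matter for visibility are modeled."""
    created: date
    last_updated: date | None = None


def team_on(history: list[tuple[date, str]], day: date) -> str:
    """Return the agent's team on `day`, given (effective_from, team) entries."""
    current = None
    for effective_from, team in sorted(history):
        if effective_from <= day:
            current = team
    if current is None:
        raise ValueError("agent had no team on that date")
    return current


def visible_to(supervisor_team: str, conv: Conversation,
               agent_history: list[tuple[date, str]]) -> bool:
    # Visibility follows the agent's team when the conversation was last indexed:
    # its creation date, or its update date if it has been updated since.
    indexed_on = conv.last_updated or conv.created
    return team_on(agent_history, indexed_on) == supervisor_team


if __name__ == "__main__":
    history = [(date(2024, 1, 1), "Team A"), (date(2024, 6, 1), "Team B")]
    conv = Conversation(created=date(2024, 5, 20))
    print(visible_to("Team A", conv, history), visible_to("Team B", conv, history))
    conv.last_updated = date(2024, 6, 10)  # e.g. a tag added during a review
    print(visible_to("Team A", conv, history), visible_to("Team B", conv, history))
```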

Viewing conversations involving multiple teams (transfers, email chains, or conference calls)

If a Supervisor or Team Leader can view part of a conversation (because it was handled by an agent from their team), they can view the whole conversation, including the parts handled by agents from other teams.
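This rule is all-or-nothing at the conversation level, as the minimal sketch below shows. The segment IDs, team names, and function name are hypothetical.

```python
from __future__ import annotations


def visible_segments(supervisor_team: str,
                     segments: list[tuple[str, str]]) -> list[str]:
    """Return the segment IDs a supervisor can see.

    `segments` holds hypothetical (segment_id, team_of_handling_agent) pairs.
    """
    # One segment from the supervisor's team unlocks the whole conversation.
    if any(team == supervisor_team for _, team in segments):
        return [segment_id for segment_id, _ in segments]
    return []


if __name__ == "__main__":
    transfer_chain = [("call-1", "Team A"), ("transfer-2", "Team B"), ("email-3", "Team C")]
    print(visible_segments("Team B", transfer_chain))
    print(visible_segments("Team D", transfer_chain))
```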
