Automated Quality Management (AQM)

This feature requires a dedicated license file. Users must be granted the appropriate license-based effective role in order to access the functionality provided. Please review the instructions on Using Eleveo Specific Roles to ensure that the appropriate license-based roles are added to Eleveo-specific roles.

Users must have the following role assigned by an administrator: AUTOMATED_RULES_MANAGE

NOTE: The system evaluates conversations that are matched to agents. If no automated score is assigned to a conversation, it is possible that the conversation has not been correctly matched to a user (agent).

Generative AI: Some functionality depends on connectivity to an additional server that analyzes transcriptions. This feature set is licensed separately. At least one party to the conversation must have the role AI_PROCESSING_ENABLED assigned for Generative AI to work with Automated Rules. Automated Rules display options related to AI-based questions even if the required server is not installed.

Automated Rules

Automated Quality Management (AQM) can automatically evaluate all call-based conversations available within the Quality Management application. The Automated Rule builder allows you to create rules that assess all conversations (or a subset of conversations) and assign a score to individual conversations. Multiple rules and their final scores can be used to gain better insight into your contact center operations. Results are displayed directly within the Conversation Explorer, where you can search for conversations based on their score. Automated Rules help you find underperforming agents, outstanding agent performance, questionable interactions, or unexpected issues that might otherwise go unnoticed in the contact center.

Use Automated Quality Management (AQM) to:

-

Find specific (problematic) conversations and better understand the needs of customers.

-

Identify areas where your contact center teams lack expertise.

-

Provide feedback and guidance to agents.

-

Assess agent performance or adherence to specific requirements.

Information available is installation dependent

Some information used by Automated Quality Management depends on the specific installation and the integrations connected to your Quality Management server. For example, each speech engine provides slightly different outputs, which affects the data available for Automated Quality Management. Generative AI is another example of an installation-specific feature set.

Specifically, not all speech engines provide emotion and acoustic parameters. If your installation does not display emotion-related information, this is most likely the reason.

How do Automated Rules work?

The Eleveo server evaluates conversations based on the Automated Rules configured by the user. Conversations are assessed on a regular basis, with the evaluation results displayed in the Conversation Explorer. Users can search for conversations based on the score assigned by the Automated Rules or by the rule name itself.

What conversations are evaluated? How often are they evaluated?

By default, the backend service will evaluate new (and recent) conversations on an hourly basis. Any conversation from within the past 3 days (by default) will be assessed.

Specifically, any conversation will be evaluated if it meets the following conditions:

-

Occurred within the last 3 days (the default setting may be modified by your administrator)

-

Occurred at least one hour ago (this is configurable as a 'delay period')

-

Matches the conditions set by the user when creating an automated rule (metadata, voice, etc.)

-

Has not yet been evaluated by this specific automated rule
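The four conditions above can be read as a single eligibility check that the server applies on each hourly pass. The Python sketch below is illustrative only: it assumes the default 3-day window and 1-hour delay period, and the function name `is_eligible` and its parameters are hypothetical, not part of the Eleveo product.

```python
from datetime import datetime, timedelta, timezone

LOOKBACK = timedelta(days=3)        # default: conversations from the last 3 days
DELAY_PERIOD = timedelta(hours=1)   # default delay period


def is_eligible(occurred_at, now, matches_rule_conditions, already_evaluated_by_rule,
                lookback=LOOKBACK, delay=DELAY_PERIOD):
    """Return True if a rule should score this conversation in the current pass."""
    age = now - occurred_at
    return (
        age <= lookback                     # occurred within the lookback window
        and age >= delay                    # occurred at least `delay` ago
        and matches_rule_conditions         # metadata, voice, saved filter, etc.
        and not already_evaluated_by_rule   # each rule scores a conversation once
    )


now = datetime(2024, 5, 10, 12, 0, tzinfo=timezone.utc)
print(is_eligible(now - timedelta(minutes=30), now, True, False))  # False: still within the delay
print(is_eligible(now - timedelta(hours=5), now, True, False))     # True
print(is_eligible(now - timedelta(days=4), now, True, False))      # False: older than 3 days
```

Both time limits are parameters because, as noted above, your administrator can change the defaults.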

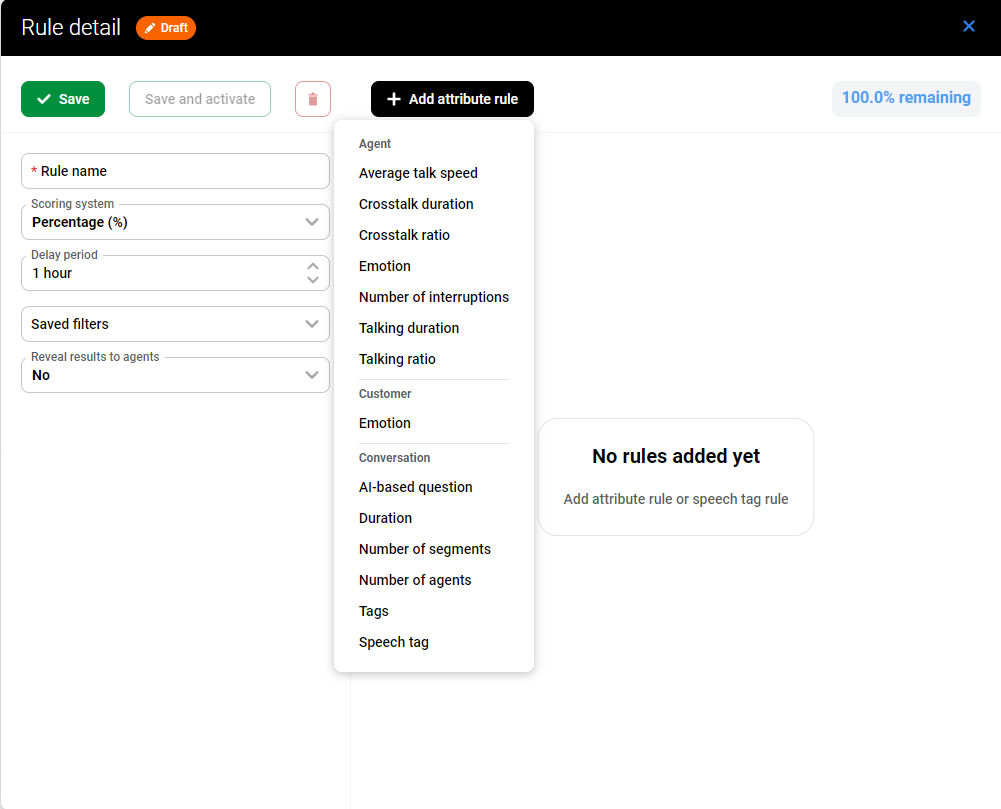

What attributes can be used when creating Automated Rules?

The system calculates an Automated Score based on the metadata and other attributes associated with a conversation. Supported metrics for the calculation include (installation-dependent):

-

Standard metadata – Available options may vary based on the data available within your specific installation.

-

Agent-related attributes (impact only the specific agent's score; detected by the Speech Engine)

-

Average talk speed

-

Agent crosstalk duration

-

Agent crosstalk ratio

-

Agent emotion

-

Agent number of interruptions

-

Agent talking duration

-

Agent talking ratio

-

Customer (impacts the score of each agent within the conversation) (detected by the Speech Engine)

-

Customer Emotion

-

Conversation level attributes (impacts the score of each agent within the conversation)

-

AI-based question (installation-dependent; requires a Generative AI license, and transcriptions must be enabled)

-

Duration – time (number)

-

Number of segments

-

Number of agents

-

Tags – Predefined (dropdown) list of tags created by an administrator or manager.

-

Speech Tags (if available; installation-dependent)

Where are the scores displayed?

The score is displayed in a dedicated column on the Conversation Explorer screen. Additional details are visible within the Details pane.

Can Automated Rules be shared between managers?

Any user who has the role AUTOMATED_RULES_MANAGE can view and modify rules. The score is visible to all users.

Can a conversation be evaluated multiple times?

Yes. Each Automated Rule is applied to a conversation only once. However, a conversation can be scored by multiple rules (depending on the saved filters each rule uses), and each agent in the conversation is evaluated separately. Results are displayed within the Details pane.
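To illustrate how "once per rule" combines with per-agent evaluation, the hypothetical sketch below lists the (rule, conversation, agent) combinations that still need a score. The `done` set and all identifiers are assumptions made for illustration; the bookkeeping actually used by the Eleveo server is not documented here.

```python
from itertools import product


def pending_evaluations(conversation_id, agent_ids, matching_rule_ids, done):
    """Yield (rule, conversation, agent) triples that have not been scored yet.

    Each matching rule scores each agent in the conversation at most once;
    `done` holds the triples that were already evaluated.
    """
    for rule_id, agent_id in product(matching_rule_ids, agent_ids):
        key = (rule_id, conversation_id, agent_id)
        if key not in done:
            yield key


done = {("rule-A", "conv-1", "agent-1")}
pending = list(pending_evaluations("conv-1", ["agent-1", "agent-2"], ["rule-A", "rule-B"], done))
print(len(pending))  # 3
```

Two matching rules and two agents give four possible results; one has already been produced, so three remain.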

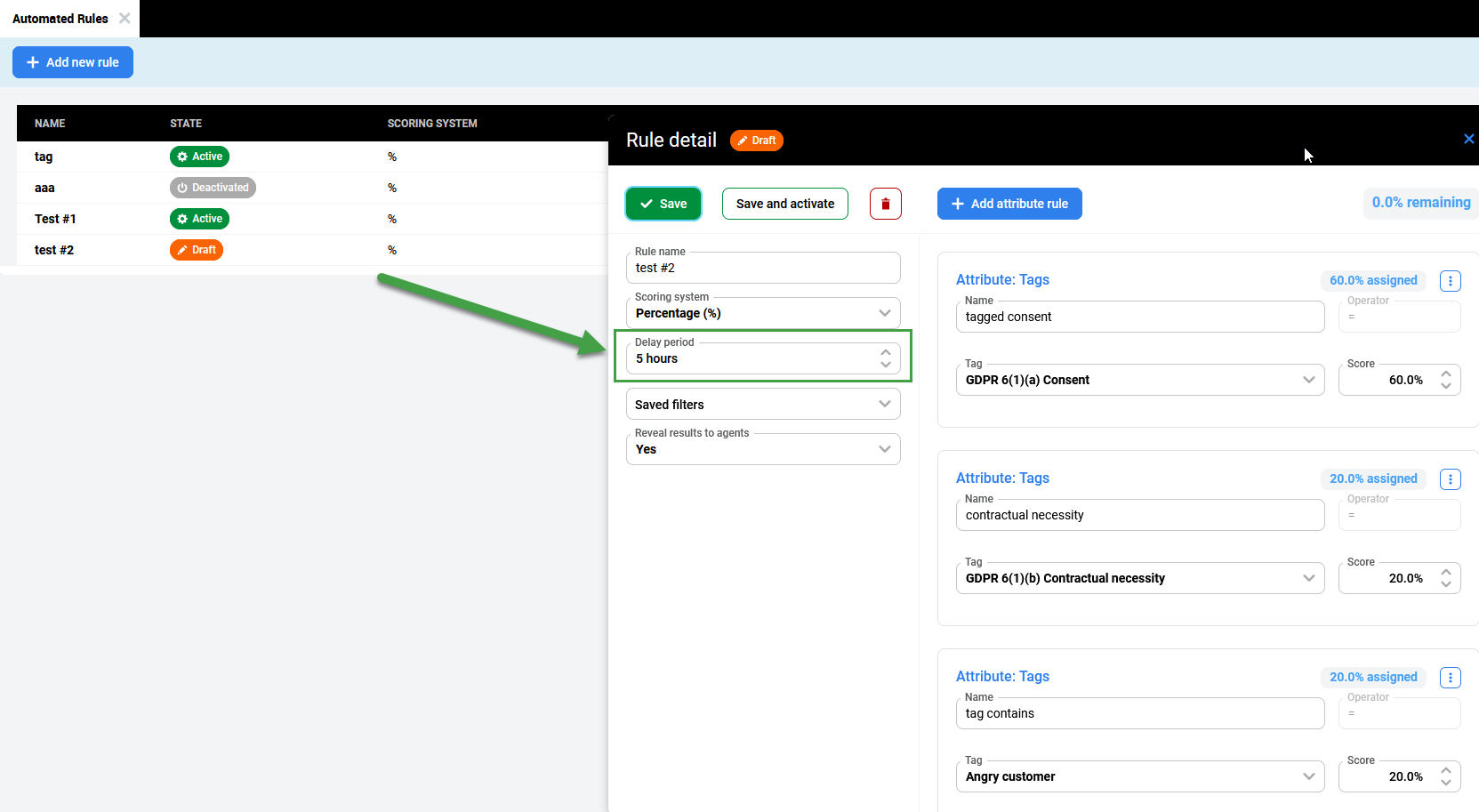

Delay period

When you create an Automated Rule, you set the delay period. It specifies the minimum time the system waits before scanning a conversation and assigning it a score. This delay ensures that all relevant data has time to be processed after it is imported into the system.

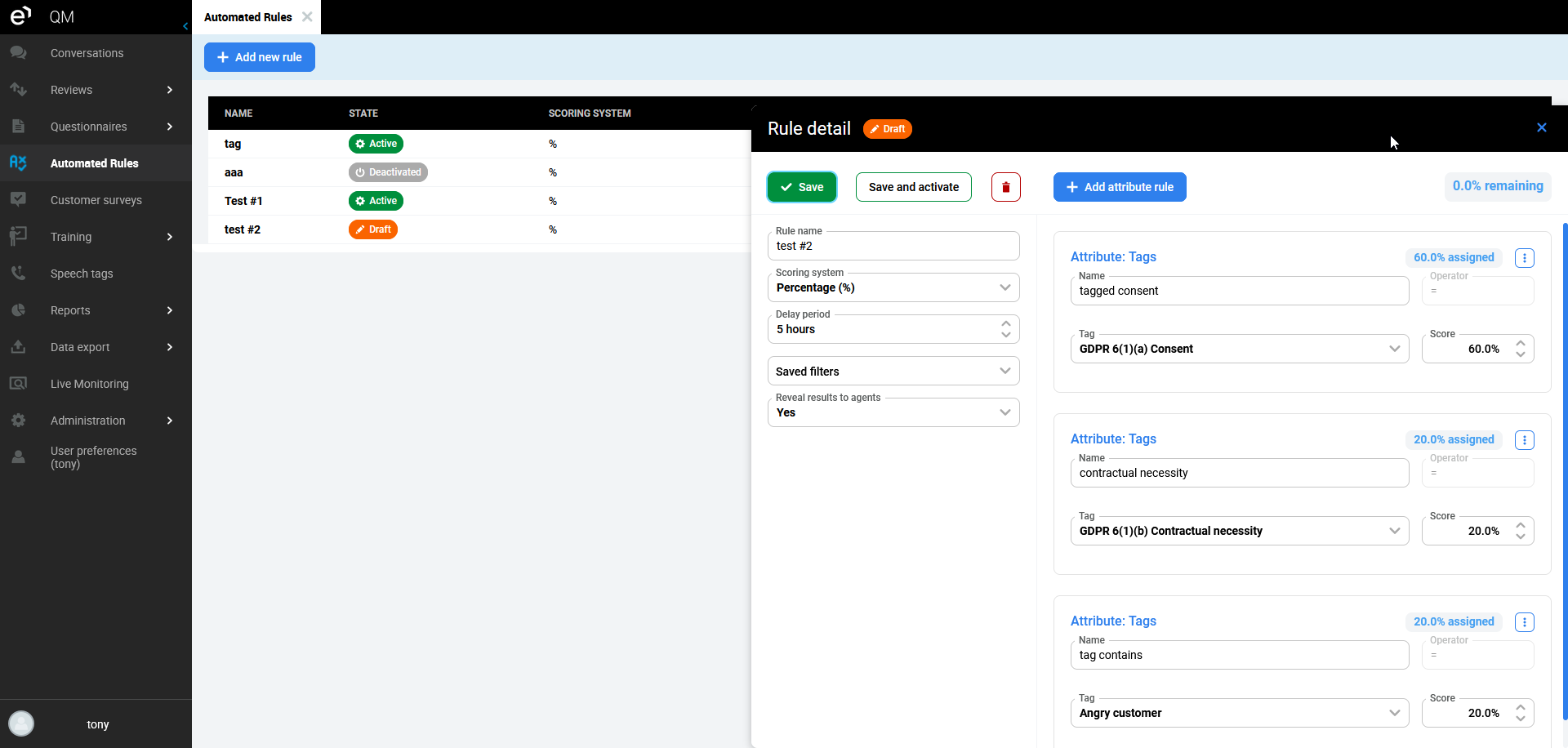

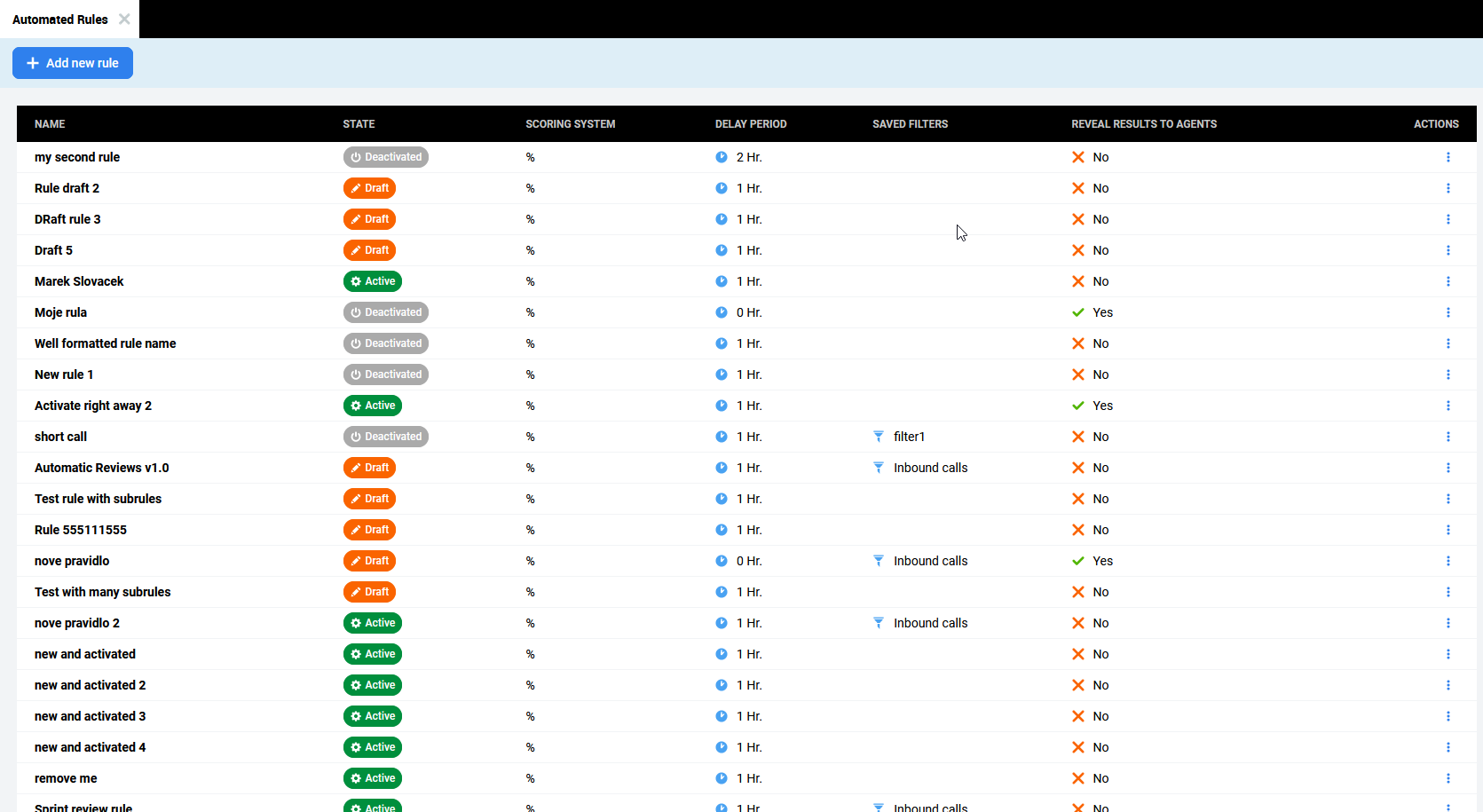

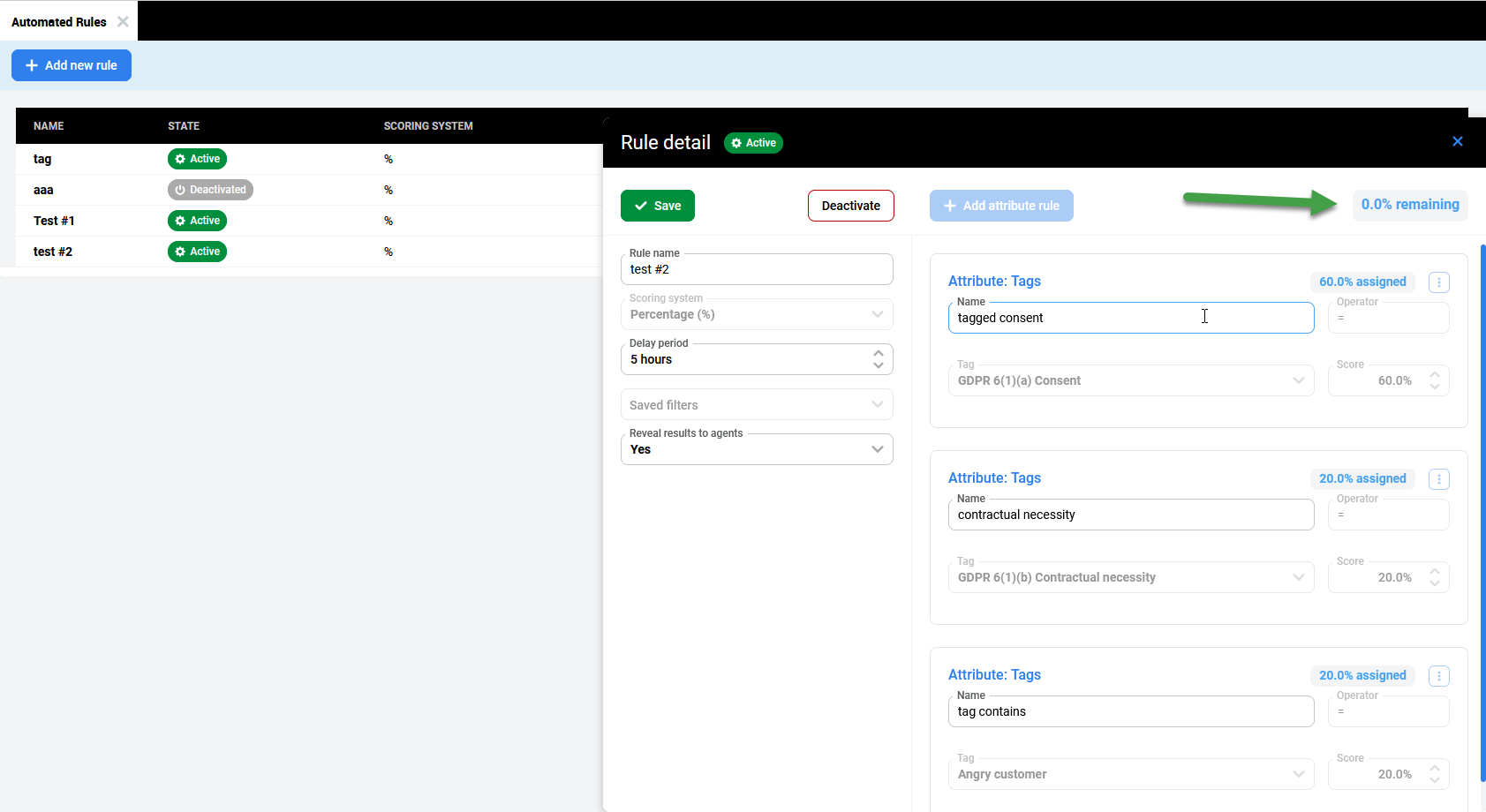

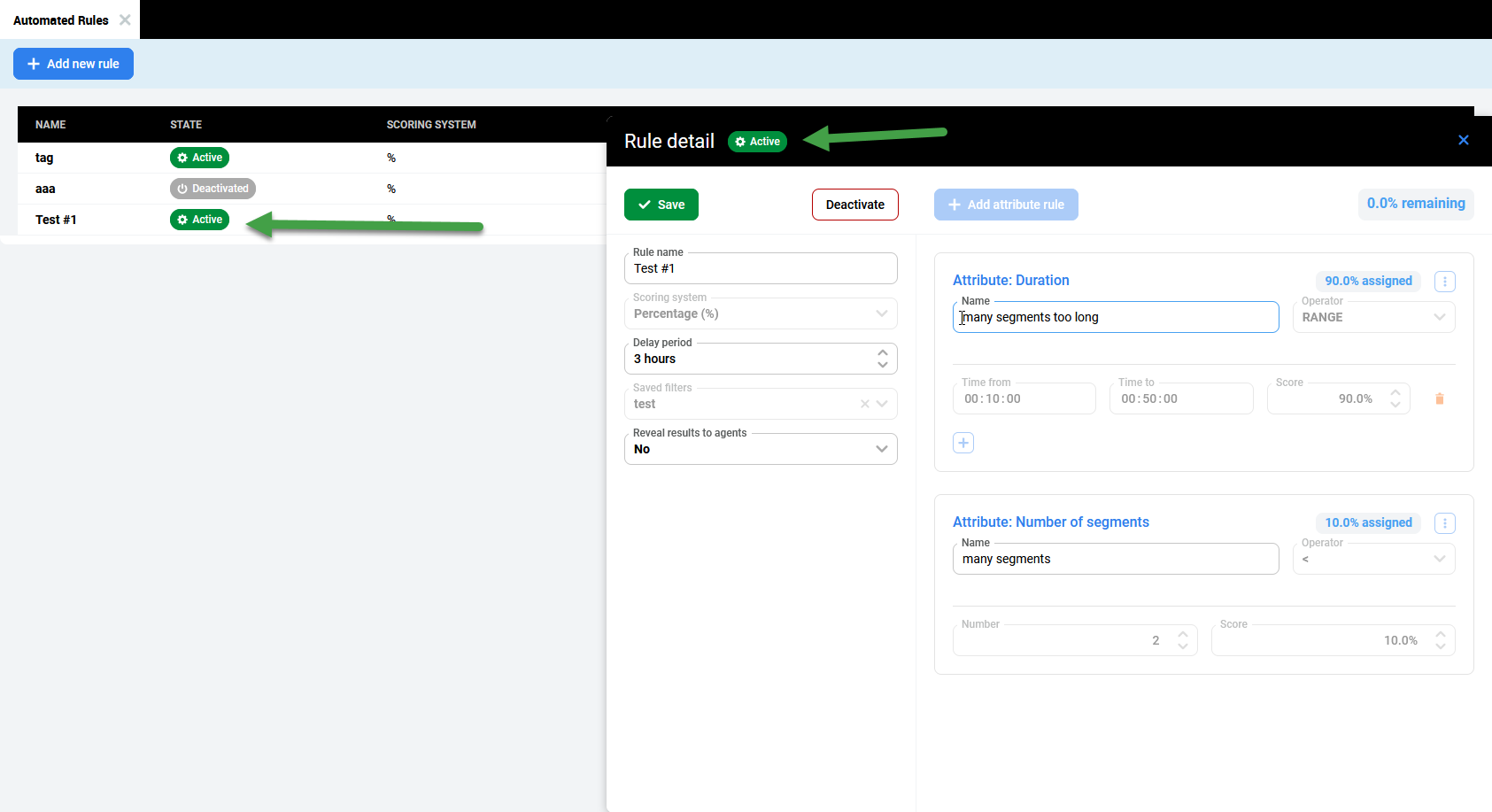

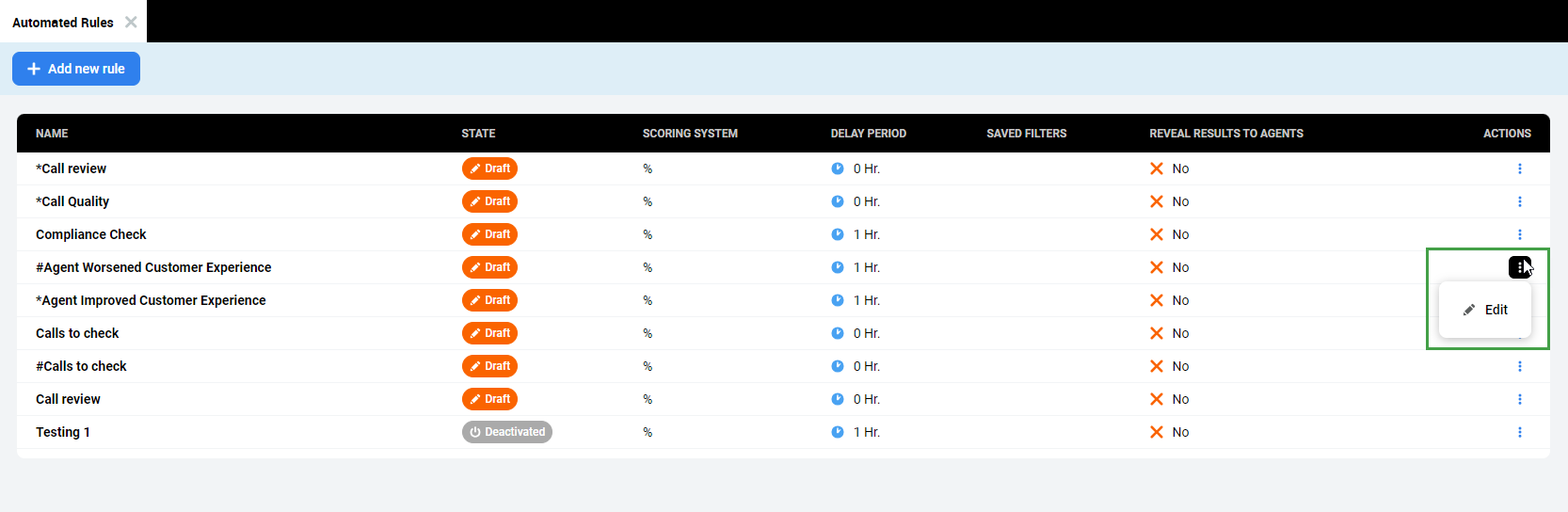

View Automated Rules

The Automated Rules tab displays a list of all Automated Rules. The following information is displayed by default.

-

Name – User-defined name of the rule

-

State – The current state of the rule. Rules can be in one of the following states: Active, Draft, or Deactivated.

-

Scoring system – User-defined scoring system used when calculating the results (only % is available in this version)

-

Delay Period – User-defined delay. This affects how often the system scans and calculates results. Note that creating many filters to run every hour may impact performance.

-

Saved Filters – User-defined filters that define which conversations are included when calculating results.

Use filters to focus the rule on a specific subset of conversations. If you do not use a filter, all conversations will be evaluated, which may clutter the Details pane.

Filter not visible?

If a filter does not appear in the list, edit the filter and temporarily add "1" to the beginning of its name. This makes it appear in the dropdown menu. Once it is visible and selected, you can remove the "1" to return it to its proper alphabetical position.

-

Reveal Results to Agents – Indicates if the result will be visible to agents

All results are displayed to all users in this version. We are actively working to enable this feature.

-

Actions – Click to view more options.

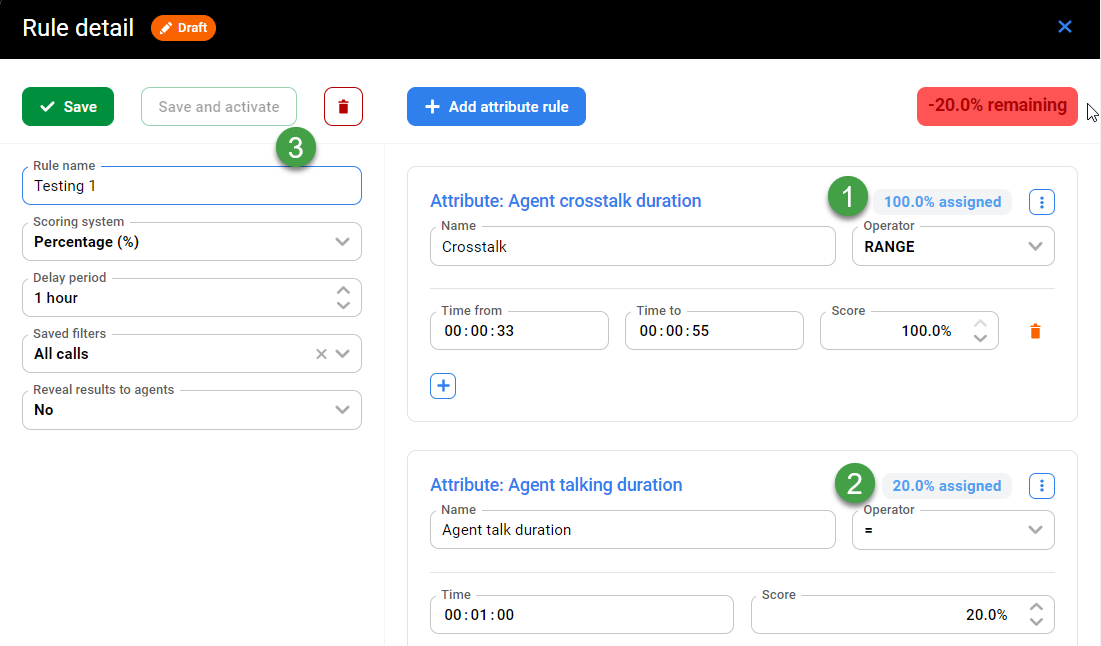

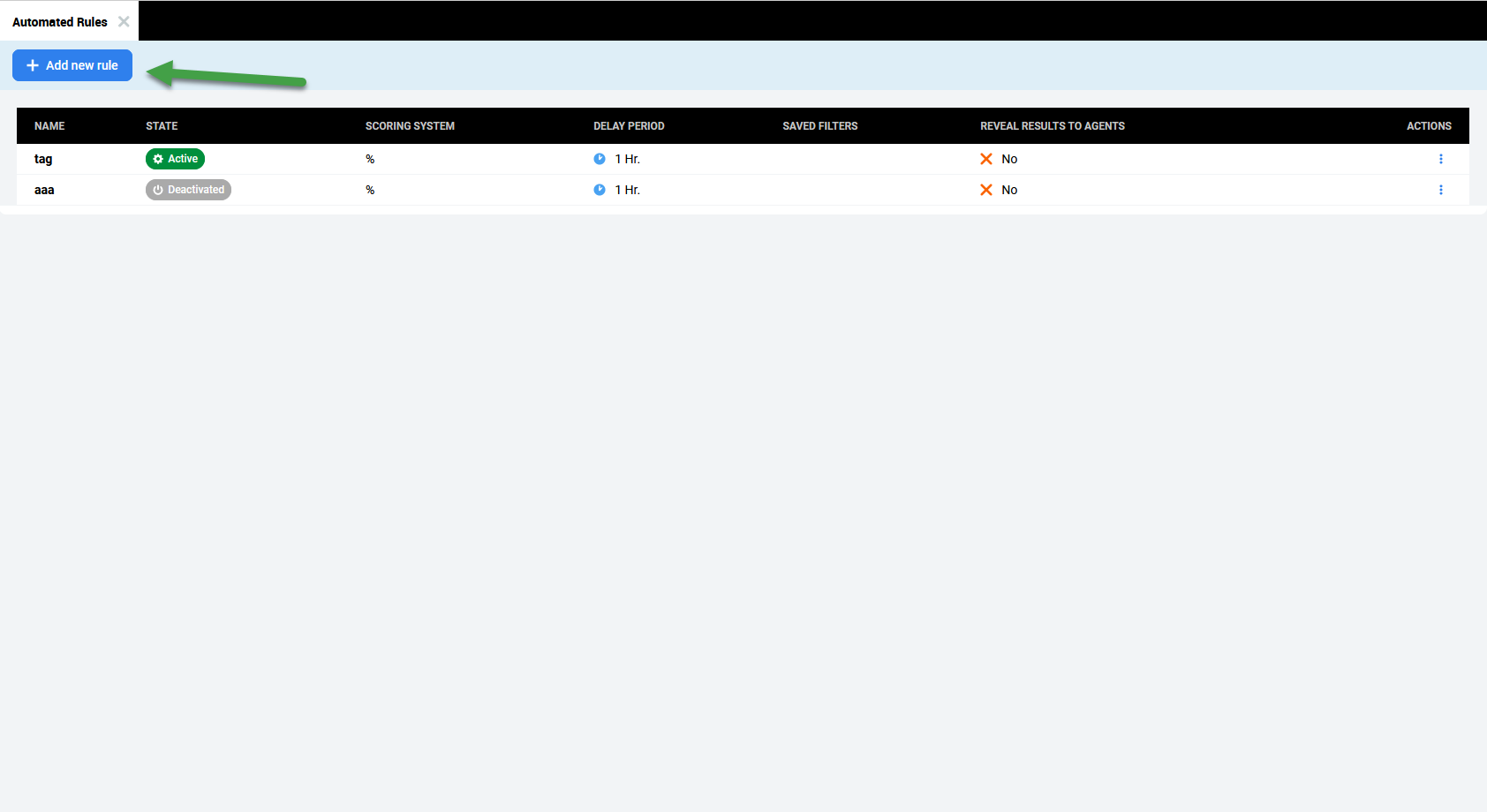

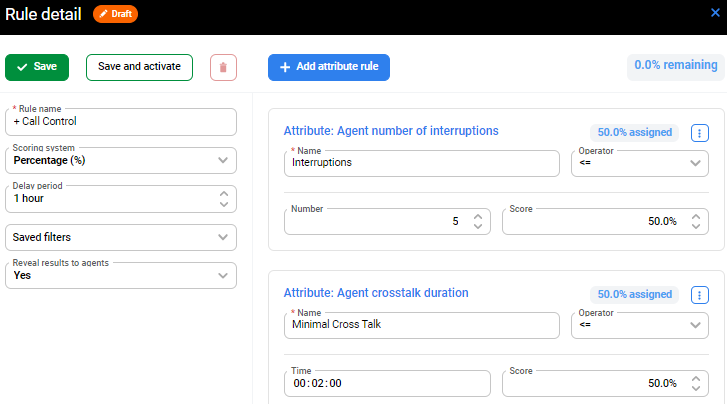

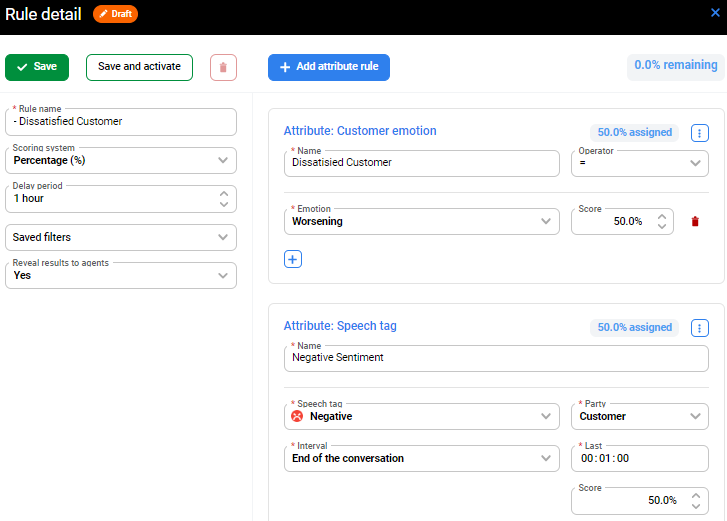

Create an Automated Rule

Users who have been assigned the role AUTOMATED_RULES_MANAGE can add new rules from within the Automated Rules tab in Quality Management.

Rules are created using a combination of filters, attributes, and tags. Not all options can be combined when creating a rule, and not all options are mandatory.

To add/create a new Rule:

-

Click the Add new rule button

A menu will open on the right-hand side.

-

The Rule detail pane will display.

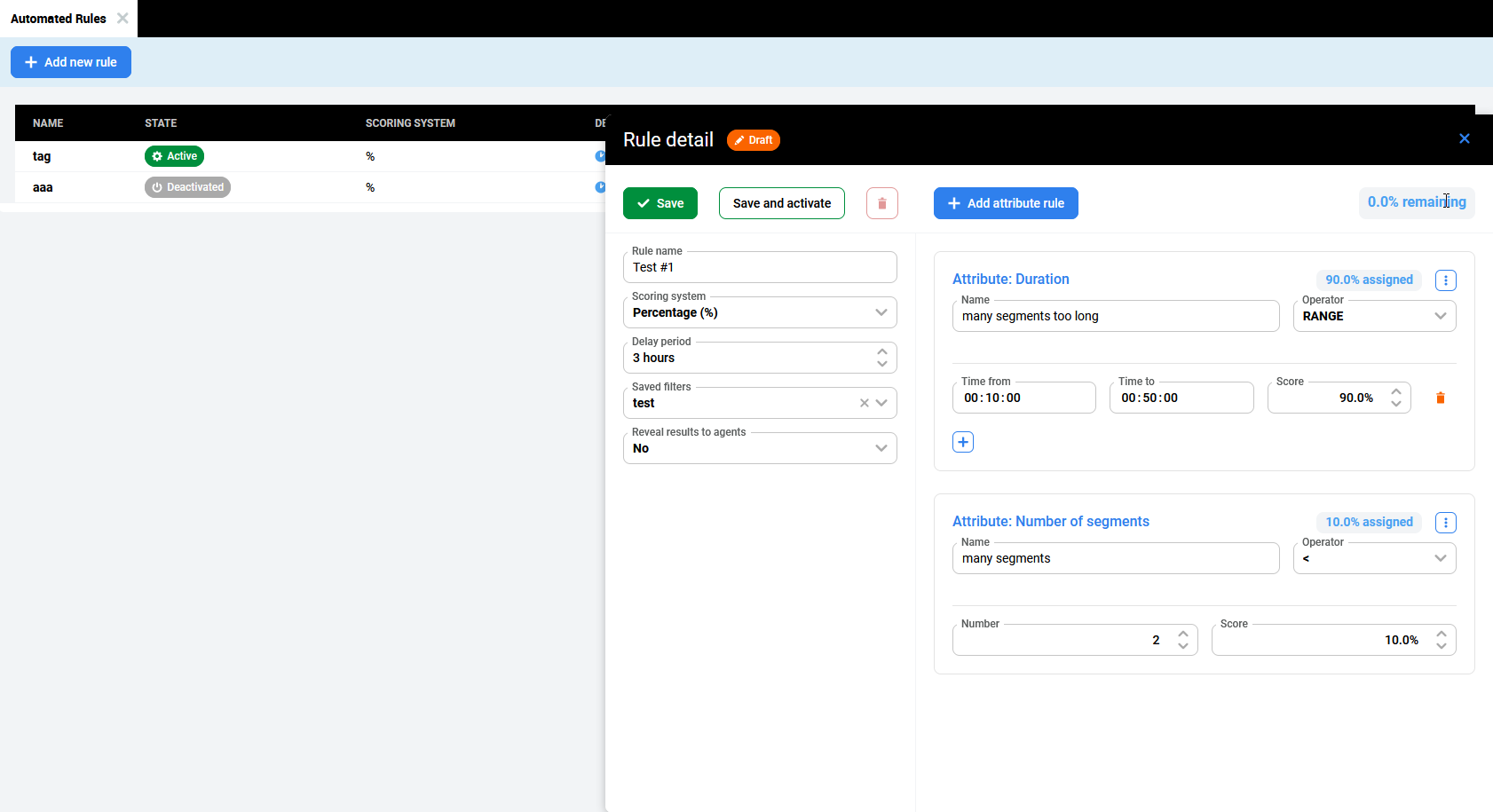

Fill in or select the relevant fields:

-

Rule name – Name the new rule (mandatory field)

-

Scoring system – Percentage (%)

-

Delay period – Defines how long the system waits before assigning a score to a conversation after it is imported (1 hour by default).

-

Saved filter(s) – Use a filter to limit the conversations assessed by the Automated Rule. Select one of the available options; the options vary based on your existing set of filters. If no filter is selected, this rule will assess all conversations. (Hint: Create a filter in the Conversation Explorer before creating an Automated Rule.)

-

Reveal results to agents – Select one of the options. (Not currently supported: all results are displayed to all users in this version. We are actively working to enable this feature.)

-

No (default)

-

Yes - Agents will see the result in the Conversation Explorer.

-

-

Add attribute rule(s):

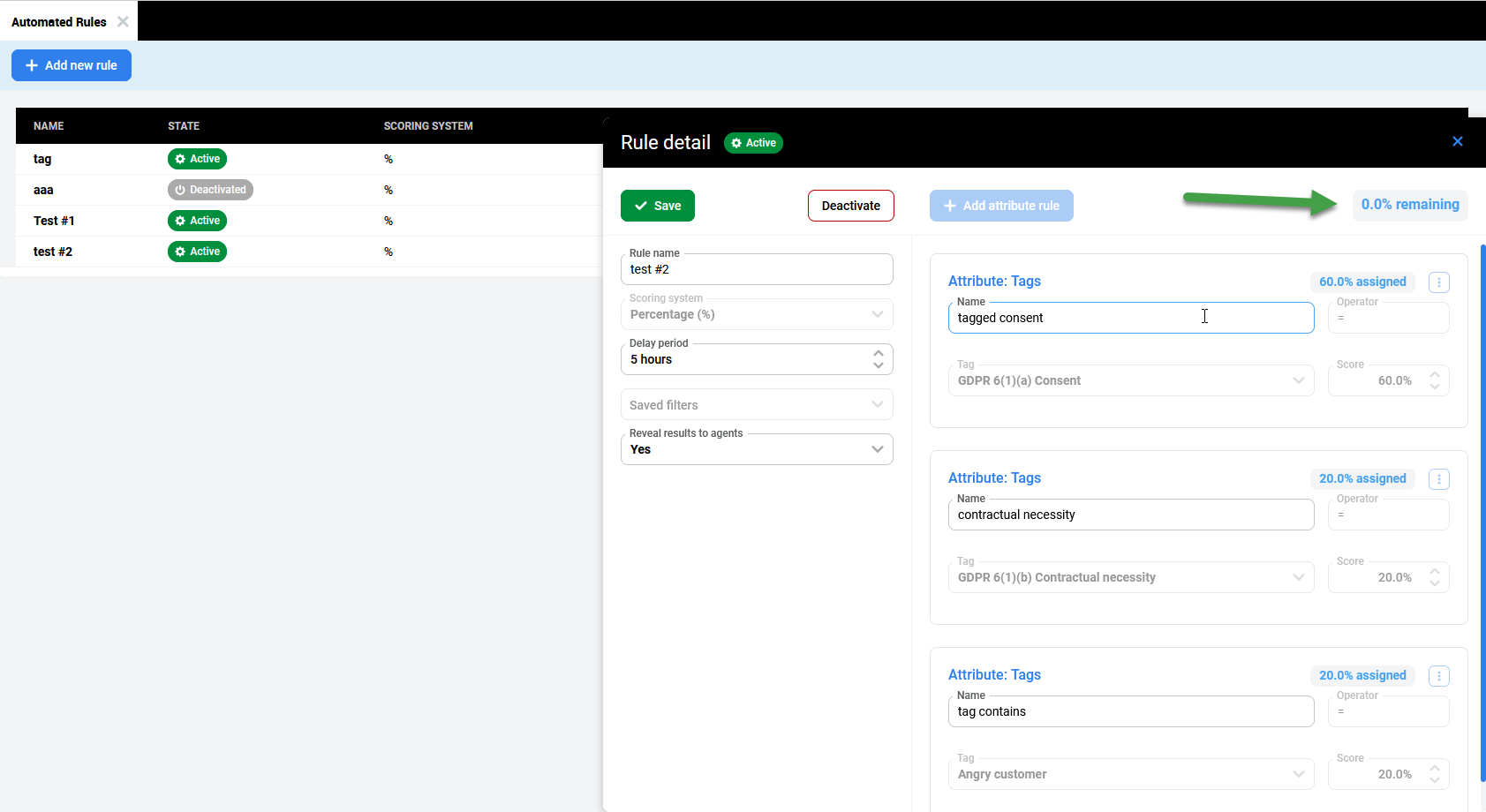

Select one of the available attribute types and fill in the available options. The Total Remaining Value (in the top right corner) shows how much of the rule's 100% score has not yet been assigned to attributes. Add attribute rules until the full 100% has been assigned (the remaining value reaches 0%).

-

Agent average talk speed

-

Name – Provide a descriptive name for the Attribute to be used (mandatory field)

-

Operator – select from the available settings. The options available are installation-dependent.

-

Equals

-

Greater than

-

Less than

-

Greater than or equal to

-

Less than or equal to

-

Range – Click the + sign to add a range and complete both fields (From and To). The "From" value cannot be greater than the "To" value. When Range is selected, you can add multiple ranges, each with its own % score.

-

Words/min – number of words per minute.

-

Score – number input as a percentage.

-

Agent crosstalk duration

-

Name – Provide a descriptive name for the Attribute to be used (mandatory field)

-

Operator – select from the available settings. The options available are installation-dependent.

-

Equals

-

Greater than

-

Less than

-

Greater than or equal to

-

Less than or equal to

-

Range – Click the + sign to add a range and complete both fields (Time from and Time to). The "From" value cannot be greater than the "To" value. When Range is selected, you can add multiple ranges, each with its own % score.

-

Time – number input as hours, minutes, and seconds.

-

Score – number input as a percentage.

-

Agent crosstalk ratio

-

Name – Provide a descriptive name for the Attribute to be used (mandatory field)

-

Operator – select from the available settings. The options available are installation-dependent.

-

Equals

-

Greater than

-

Less than

-

Greater than or equal to

-

Less than or equal to

-

Range – Click the + sign to add a range and complete both fields (From and To). The "From" value cannot be greater than the "To" value. When Range is selected, you can add multiple ranges, each with its own % score.

-

Number – percentage of the call during which crosstalk occurs.

-

Score – number input as a percentage.

-

Agent emotion

-

Name – Provide a descriptive name for the Attribute to be used (mandatory field)

-

Operator – select from the available settings. The options available are installation-dependent.

-

Equals

-

Emotion – Select one or more of the available options.

-

Score – number input as a percentage.

-

Agent number of interruptions

-

Name – Provide a descriptive name for the Attribute to be used (mandatory field)

-

Operator – select from the available settings. The options available are installation-dependent.

-

Equals

-

Greater than

-

Less than

-

Greater than or equal to

-

Less than or equal to

-

Range – Click the + sign to add a range and complete both fields (From and To). The "From" value cannot be greater than the "To" value. When Range is selected, you can add multiple ranges, each with its own % score.

-

Number – number of times the agent interrupted the other party during the conversation.

-

Score – number input as a percentage.

-

Agent talking duration

-

Name – Provide a descriptive name for the Attribute to be used (mandatory field)

-

Operator – select from the available settings. The options available are installation-dependent.

-

Equals

-

Greater than

-

Less than

-

Greater than or equal to

-

Less than or equal to

-

Range – Click the + sign to add a range and complete both fields (Time from and Time to). The "From" value cannot be greater than the "To" value. When Range is selected, you can add multiple ranges, each with its own % score.

-

Time – number input as hours, minutes, and seconds.

-

Score – number input as a percentage.

-

Agent talking ratio

-

Name – Provide a descriptive name for the Attribute to be used (mandatory field)

-

Operator – select from the available settings. The options available are installation-dependent.

-

Equals

-

Greater than

-

Less than

-

Greater than or equal to

-

Less than or equal to

-

Range – Click the + sign to add a range and complete both fields (From and To). The "From" value cannot be greater than the "To" value. When Range is selected, you can add multiple ranges, each with its own % score.

-

Number – percentage of the call during which the agent is speaking.

-

Score – number input as a percentage.

-

Customer emotion

-

Name – Provide a descriptive name for the Attribute to be used (mandatory field)

-

Operator – select from the available settings. The options available are installation-dependent.

-

Equals

-

Greater than

-

Less than

-

Greater than or equal to

-

Less than or equal to

-

Emotion – Select one or more of the available options.

-

Score – number input as a percentage.

-

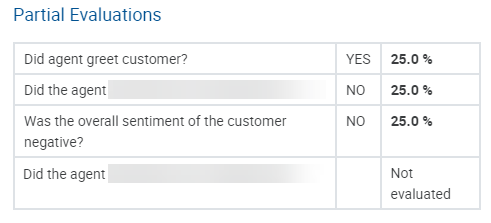

AI-based question

-

Question text – Provide a question for Generative AI to answer. The question should be specific. (mandatory field)

Create question prompts that are clear and well defined, e.g. "Did the agent greet the customer?" or "Did the agent say goodbye at the end of the call?" Refer to the recommendations in the section Guidelines for creating questions to use with AI evaluations.

-

Predefined answers – Select from the available options.

-

Yes/No

-

Yes/No/Not applicable – Use this option to include responses from the Generative AI engine that are inconclusive.

-

Score for each of the responses – number input as a percentage.

NOTE: If an Automated Rule includes multiple questions and the server cannot process one of them (for any reason), the result for that question is displayed as "could not be evaluated" in the Conversation Explorer Details Pane. The other questions are still processed, and their results are displayed in the Conversation Explorer.

Example of a single question that is not processed by the server while the others are processed successfully:

-

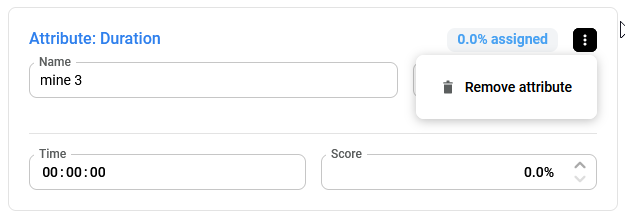

Duration

-

Name – Provide a descriptive name for the Attribute to be used (mandatory field)

-

Operator – select from the available settings. The options available are installation-dependent.

-

Equals

-

Greater than

-

Less than

-

Greater than or equal to

-

Less than or equal to

-

Range – Click the + sign to add a range and complete both fields (Time from and Time to). The "From" value cannot be greater than the "To" value. When Range is selected, you can add multiple ranges, each with its own % score.

-

Time – number input as hours, minutes, and seconds.

-

Score – number input as a percentage.

-

Number of segments

-

Name – Provide a descriptive name for the Attribute to be used (mandatory field)

-

Operator – select from the available settings. The options available are installation-dependent.

-

Equals

-

Greater than

-

Less than

-

Greater than or equal to

-

Less than or equal to

-

Range – Click the + sign to add a range and complete both fields (From and To). The "From" value cannot be greater than the "To" value. When Range is selected, you can add multiple ranges, each with its own % score.

-

Number – number of segments in the conversation.

-

Score – number input as a percentage.

-

Number of agents

-

Name – Provide a descriptive name for the Attribute to be used (mandatory field)

-

Operator – select from the available settings. The options available are installation-dependent.

-

Equals

-

Greater than

-

Less than

-

Greater than or equal to

-

Less than or equal to

-

Range – Click the + sign to add a range and complete both fields (From and To). The "From" value cannot be greater than the "To" value. When Range is selected, you can add multiple ranges, each with its own % score.

-

Number – number of agents in the conversation.

-

Score – number input as a percentage.

-

Tags

-

Name – Provide a descriptive name for the Attribute to be used (mandatory field)

-

Operator – Equals (fixed setting, not possible to change)

-

Tag – Select from the dropdown list.

-

Score – number input as a percentage.

-

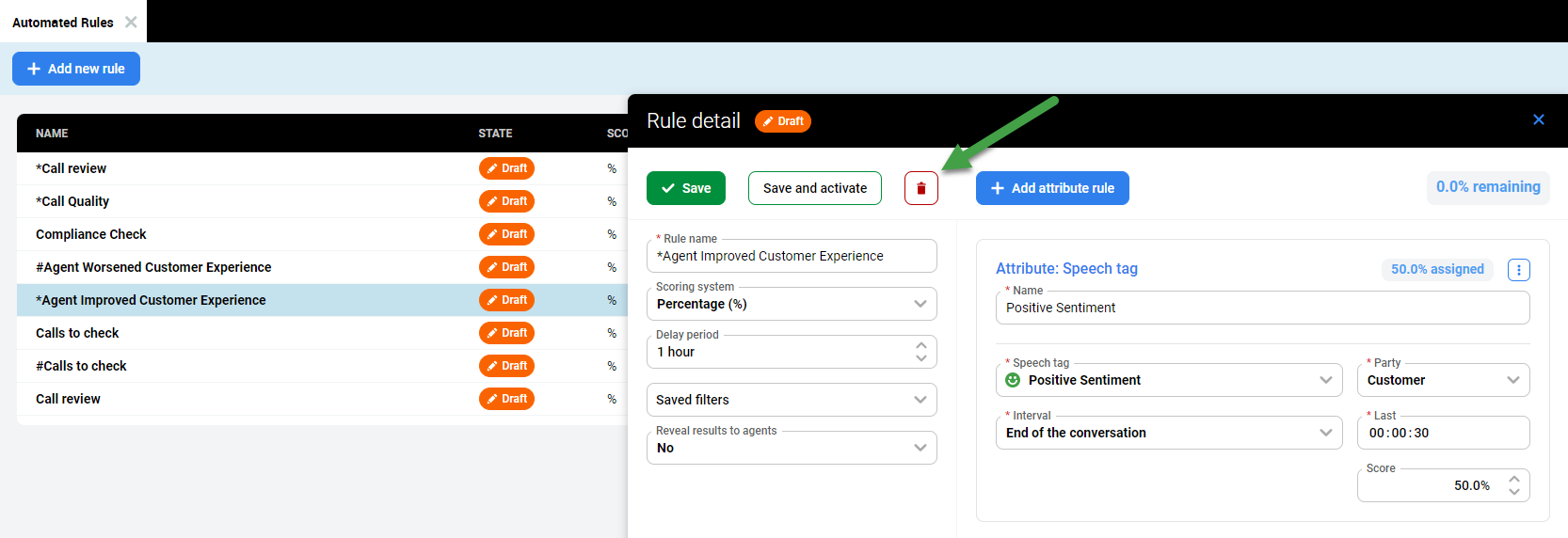

Speech tag

-

Name – Provide a descriptive name for the Attribute to be used (mandatory field)

-

Speech tag – Select from the dropdown list

-

Party – Select one of the available options: Agent, Customer, or Any.

-

Interval – Select where in the conversation to look for the speech tag: Beginning of the conversation, End of the conversation, or During the entire conversation. If you search at the beginning or end of the conversation, you must also define the time frame to search within; this value is labeled First or Last. For example, to look for the speech tag Greeting within the first 15 seconds of the recording, enter 00:00:15 in the First input field.

-

Score – number input as a percentage.

-

Add attribute rules until the remaining percentage equals 0% and the Save and activate button becomes active.

-

Click Save to save the rule as a Draft.

Click Save and activate to activate the rule.

-

The new rule is displayed in the list of all rules with the state Active or Draft.
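The Total Remaining Value can be modeled as 100% minus the highest score each attribute can award. This is an assumption based on the Max column of the table in Examples of Complex Automated Rules, where the per-attribute maximums add up to 100. The function names below are hypothetical, and the sketch is not the product's own calculation.

```python
import math


def total_remaining_value(attributes):
    """100% minus the highest score each attribute can award.

    `attributes` maps an attribute name to the scores configured for it:
    one score for a simple comparison, or one score per range or emotion.
    """
    return 100 - sum(max(scores) for scores in attributes.values())


def can_save_and_activate(attributes):
    """Save and activate becomes available only once the remaining value is 0%."""
    return math.isclose(total_remaining_value(attributes), 0, abs_tol=1e-9)


draft = {"Duration": [10, 7.5, 5], "Customer emotion": [15, 10, 5]}
print(total_remaining_value(draft))  # 75
print(can_save_and_activate(draft))  # False
```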

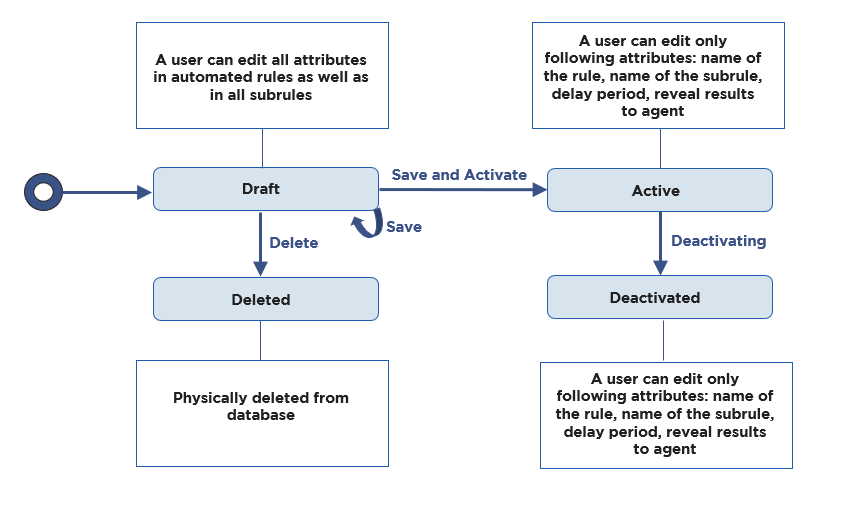

Rules in the Draft state are not active and can be edited before being activated. Refer to the graphical representation of the Automated Rule workflow in the section View Automated Rules for detailed information about editing rules based on their state.
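Each attribute described in the rule editor awards its score either when a single comparison holds (Equals, Greater than, and so on) or when the value falls inside one of several ranges. The Python sketch below shows that logic under stated assumptions. The operator mapping and function names are hypothetical. Range bounds are assumed to be inclusive with the first matching range winning, because the documentation does not say how a boundary value shared by two ranges is treated.

```python
import operator

# Hypothetical mapping from the operator labels in the rule editor to comparisons.
OPERATORS = {
    "Equals": operator.eq,
    "Greater than": operator.gt,
    "Less than": operator.lt,
    "Greater than or equal to": operator.ge,
    "Less than or equal to": operator.le,
}


def score_comparison(value, op_label, threshold, score):
    """Award `score` when `value <op> threshold` holds, otherwise 0."""
    return score if OPERATORS[op_label](value, threshold) else 0


def score_ranges(value, ranges):
    """Return the score of the first (from, to, score) range containing `value`.

    Assumption: bounds are inclusive and the first match wins, so 100 in
    0-100 and 100-200 goes to the earlier range. Values outside all ranges score 0.
    """
    for low, high, _ in ranges:
        if low > high:
            raise ValueError('The "From" value cannot be greater than the "To" value')
    for low, high, score in ranges:
        if low <= value <= high:
            return score
    return 0


# Agent average talk speed (words/min) with several ranges, each with its own score.
speed_ranges = [(0, 100, 0), (100, 200, 15), (200, 250, 10)]
print(score_ranges(150, speed_ranges))            # 15
print(score_comparison(58, "Less than", 65, 10))  # 10: agent talking ratio below 65%
```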

NOTE:

-

When setting time parameters for delay and period, note that Saved filters are defined based on UTC time and are not shifted to the user's time zone. Please take this into account when configuring the filters.

-

Saved filters are not mandatory in the definition of an Automated Rule. If no Saved Filter is specified, all reviewable conversations with type=call will be evaluated.
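Because Saved filters use UTC, a time-of-day condition that you think of in local time must be converted before you enter it. The minimal Python sketch below assumes a fixed UTC offset to keep it self-contained. A real conversion should use the IANA time zone (for example via Python's `zoneinfo`) so that daylight saving time is handled. The helper name `local_to_utc` is hypothetical.

```python
from datetime import datetime, timedelta, timezone


def local_to_utc(local_str, utc_offset_hours):
    """Convert a local 'YYYY-MM-DD HH:MM' wall-clock time to UTC."""
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    local = datetime.strptime(local_str, "%Y-%m-%d %H:%M").replace(tzinfo=local_tz)
    return local.astimezone(timezone.utc)


# 08:00 local time in a UTC+2 zone is 06:00 UTC; in a UTC-5 zone it is 13:00 UTC.
print(local_to_utc("2024-07-01 08:00", 2))   # 2024-07-01 06:00:00+00:00
print(local_to_utc("2024-07-01 08:00", -5))  # 2024-07-01 13:00:00+00:00
```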

To remove an attribute, select the three dots and click Remove attribute.
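The Interval setting of the Speech tag attribute described above (Beginning, End, or the entire conversation, with a First or Last time frame) can be sketched as a simple time-window check. In this Python sketch, the function names are hypothetical. It also assumes that tag detections have already been filtered by Party and are given as offsets in seconds from the start of the recording.

```python
def speech_tag_found(tag_offsets_s, call_length_s, interval, window_s=None):
    """Check whether a speech tag occurs within the configured Interval.

    tag_offsets_s: offsets (seconds) at which the tag was detected for the
        selected Party (Agent, Customer, or Any).
    interval: "beginning", "end", or "entire".
    window_s: the First/Last time frame in seconds; required for beginning/end.
    """
    if interval == "entire":
        return len(tag_offsets_s) > 0
    if window_s is None:
        raise ValueError("A First/Last time frame must be defined")
    if interval == "beginning":
        return any(t <= window_s for t in tag_offsets_s)
    if interval == "end":
        return any(t >= call_length_s - window_s for t in tag_offsets_s)
    raise ValueError(f"Unknown interval: {interval!r}")


def hms_to_seconds(hms):
    """Convert an 'HH:MM:SS' input such as 00:00:15 to seconds."""
    h, m, s = (int(part) for part in hms.split(":"))
    return h * 3600 + m * 60 + s


# Greeting detected 4 s into a 3-minute call, searched within the First 00:00:15.
print(speech_tag_found([4.0], 180, "beginning", hms_to_seconds("00:00:15")))  # True
```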

Guidelines for creating questions to use with AI evaluations:

It is recommended that you follow these guidelines when creating questions to use with AI evaluations in Automated Rules. It may be necessary to tweak questions after some time to ensure accuracy of the output.

-

Formulate questions that can be answered with a simple "yes" or "no".

-

Questions should focus on the agent's behavior, actions, and phrases that occurred during the call.

-

Ask questions that can be answered based on the transcription content and focus on observable behavior.

-

Ensure grammatical correctness and avoid typos.

-

Ask generic questions that can be applied to various call center conversations, rather than questions about exceptional events that occurred in a single call.

-

Use clear and concise language.

-

Avoid asking multiple questions within one question. An improper question is: "Did the agent greet the customer, provide a solution, and set expectations?"

-

Use the following example questions for inspiration; they serve as a starting point for creating your own questions.

Example questions (generic):

-

Did the agent greet the customer in a professional manner?

-

Did the agent introduce themselves or the company?

-

Was the agent's communication clear and concise?

-

Did the agent remain calm and professional?

-

Did the agent provide a solution or resolution to the customer's issue?

-

Did the agent thank the customer for their time?

-

Did the agent invite the caller to take a post-call survey?

-

Did the agent explain the next steps needed?

-

Did the agent offer additional support or resources if needed?

-

Did the agent summarize the call outcome?

-

Did the agent identify and capitalize on sales opportunities or upselling chances?

-

Did the agent educate the customer on product/service features?

-

Did the agent inform the customer that the call will be recorded?

-

Did the agent adhere to regulatory compliance during the call?

Example questions related to FCA COCON rules and best practices (specific to the Financial Conduct Authority Code of Conduct):

-

Did the agent act with integrity? (i.e., the agent did not mislead a client, falsify documents, or mismark the value of a trading position)

-

Did the agent act with due skill, care and diligence? (i.e., the agent did not fail to explain all risks to customers or undertake transactions without a reasonable understanding of the risks)

-

Did the agent pay due regard to customer interests and treat them fairly? (i.e., the agent did not recommend unsuitable products, withhold crucial information, or prioritize personal gain over the customer's best interests)
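Some of the question guidelines above can be checked mechanically before a question is added to a rule. The Python sketch below is a rough, hypothetical pre-check, not a product feature. Its heuristics (a yes/no opening word, a single question mark, no ", and" list of actions) are assumptions, and they cannot judge whether a question is observable from the transcription.

```python
# Opening words that usually start a yes/no question (heuristic, not exhaustive).
YES_NO_OPENERS = ("did", "was", "were", "is", "are", "does", "do", "has", "have")


def question_warnings(question):
    """Return guideline warnings for an AI question, based on rough heuristics."""
    q = question.strip()
    words = q.split()
    warnings = []
    if not q.endswith("?"):
        warnings.append("should be phrased as a question")
    if q.count("?") > 1:
        warnings.append("contains more than one question")
    if not words or words[0].lower() not in YES_NO_OPENERS:
        warnings.append("may not be answerable with yes or no")
    if ", and " in q:
        warnings.append("may combine several questions into one")
    return warnings


print(question_warnings("Did the agent greet the customer in a professional manner?"))  # []
print(question_warnings("Did the agent greet the customer, provide a solution, and set expectations?"))
```

The second call flags the improper example from the guidelines. Treat any warning as a prompt to review the question, not as a verdict.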

Modify Automated Rules

You may change any attribute/setting for those rules marked as Drafts. Rules with the status of Draft can also be deleted. Existing rules (marked as active) can only be Deactivated.

Rules in the Active or Deactivated state can still be edited in part. You may rename the rule, rename a subrule, change the delay period, and change whether results are revealed to agents, but you cannot modify the attribute rule(s).

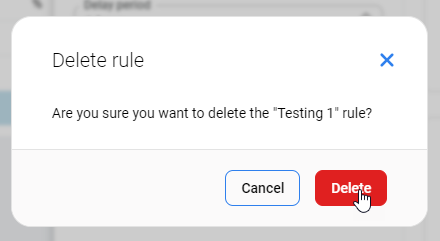

Deleting a Rule

Discard changes to an unsaved rule

When editing a rule, you can discard your changes by clicking the x in the corner of the screen. A pop-up dialog asks you to confirm that you want to proceed. The options are Cancel, Save Changes, Return to editing, and Discard changes.

Delete a rule

To delete a draft, open the rule in edit mode.

Deletion of Active or Deactivated rules is not supported; only rules in the Draft state can be deleted.

Click the red trash can icon.

A pop-up dialogue will ask you to confirm that you want to proceed. You can cancel or delete the rule.

Deactivating a Rule

Deactivated rules cannot be activated again. After deactivation, you can still edit some values: the name, the delay period, and whether results are visible to agents.

To deactivate a rule, open it for editing and select Deactivate. A prompt will ask you to confirm the action.
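The rules for modifying, deleting, and deactivating Automated Rules form a small state machine: Draft can be activated or deleted, Active can only be deactivated, and Deactivated is final. The Python sketch below encodes those transitions and the partial-edit restriction as described in this section. The field names in `ALWAYS_EDITABLE` and the function names are hypothetical.

```python
from enum import Enum


class RuleState(Enum):
    DRAFT = "Draft"
    ACTIVE = "Active"
    DEACTIVATED = "Deactivated"


# Actions allowed in each state; None means the rule no longer exists.
TRANSITIONS = {
    RuleState.DRAFT: {"activate": RuleState.ACTIVE, "delete": None},
    RuleState.ACTIVE: {"deactivate": RuleState.DEACTIVATED},
    RuleState.DEACTIVATED: {},  # cannot be activated again or deleted
}

# Hypothetical names for the settings that stay editable after activation.
ALWAYS_EDITABLE = {"name", "subrule_name", "delay_period", "reveal_results_to_agents"}


def apply_action(state, action):
    """Return the new state, or raise if the action is not allowed."""
    allowed = TRANSITIONS[state]
    if action not in allowed:
        raise ValueError(f"Cannot {action} a rule in the {state.value} state")
    return allowed[action]


def can_edit(state, field):
    """Drafts are fully editable; Active and Deactivated rules only in part."""
    return state is RuleState.DRAFT or field in ALWAYS_EDITABLE


state = apply_action(RuleState.DRAFT, "activate")
print(state.value)                         # Active
print(can_edit(state, "attribute_rules"))  # False
```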

Limitations

-

The system does NOT take into account roles (Role-Based Access Control) or filters (Attribute-Based Access Control) assigned to the owner of the filter used for automated evaluations.

-

Conversations are NOT re-evaluated if a new segment is added after the conversation has been evaluated by the current rule (see Delay period).

Processing related errors – Generative AI

If Speech Generative AI is installed and configured on your installation, then additional information will be available for Automated Rules. In some cases, the Generative AI server may not respond with consistent data or may respond with an error message. The following logic is applied in the case of error messages being sent back by the Generative AI server:

-

Error 500/422 – Cannot be parsed. Users will see the message "could not be evaluated" in the UI. Other subrules will be evaluated as expected.

-

Error 422 – No transcription. The system will retry three (3) times. If no proper response is received users will see the message "could not be evaluated" in the UI. Other subrules will be evaluated as expected.
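The error handling above can be sketched as follows. This is a minimal Python illustration, not the server's implementation. The exception classes stand in for the 422 "no transcription" and 500/422 "cannot be parsed" responses and are hypothetical. The sketch interprets "retry three (3) times" as one initial attempt plus three retries, and performs them back to back, whereas the real system may space them out.

```python
NOT_EVALUATED = "could not be evaluated"


class NoTranscriptionError(Exception):
    """Hypothetical stand-in for a 422 'no transcription' response."""


class UnparseableResponseError(Exception):
    """Hypothetical stand-in for a 500/422 response that cannot be parsed."""


def evaluate_question(ask, retries=3):
    """Ask one AI question; `ask` returns the answer or raises one of the errors above."""
    for _ in range(1 + retries):
        try:
            return ask()
        except NoTranscriptionError:
            continue  # the transcription may not be available yet: try again
        except UnparseableResponseError:
            return NOT_EVALUATED  # not retried
    return NOT_EVALUATED


def evaluate_questions(questions, retries=3):
    """Each question (subrule) is evaluated independently of the others."""
    return {name: evaluate_question(ask, retries) for name, ask in questions.items()}


def broken():
    raise UnparseableResponseError()


results = evaluate_questions({"Greeting": lambda: "YES", "Recording notice": broken})
print(results)  # {'Greeting': 'YES', 'Recording notice': 'could not be evaluated'}
```

A failure in one question does not stop the others, matching "Other subrules will be evaluated as expected."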

Examples of Complex Automated Rules

Automated Rules can be as simple or complex as you like. You may create a single rule that assigns 100% of the value to a single attribute, or you may create a complex rule that factors in multiple attributes.

Example 1 – Combine various attributes:

Here is an example of a complex rule that combines various attributes.

| Attribute | Operator | Configuration of the rule | Values | Max | Explanation of why this combination was chosen |
|---|---|---|---|---|---|
| Duration | Range | 0-1 min | 10 | 10 | Duration is relevant for Contact Centers that emphasize adherence to a specific call duration. |
| | | 1-2 min | 7.5 | | |
| | | 2-3 min | 5 | | |
| Tags | = | Happy customer | 10 | 10 | Tags are important to Contact Centers that leverage call tagging during the recording of calls (auto tagging). |
| Customer emotion | = | Positive | 15 | 15 | Focus on customer emotion. This attribute affects the score of every agent in the conversation. |
| | | Improving | 10 | | |
| | | Neutral | 5 | | |
| Agent emotion | = | Positive | 7.5 | 7.5 | Every agent benefits from having a positive, improving, or neutral emotion. Worsening and Negative emotions are not acceptable. |
| | | Improving | 5 | | |
| | | Neutral | 2.5 | | |
| Agent talking ratio | < | 65% | 10 | 10 | For Contact Centers that want to keep the agent's share of talk time in check (the agent should not be talking more than a set percentage of the time). |
| Agent average talk speed | Range | 0-100 words/min | 0 | 15 | Very slow speech might be monotonous, and customers may get bored. Very quick speech might not be understandable to all customers, so it is important that the agent speaks at a reasonable pace. |
| | | 100-200 words/min | 15 | | |
| | | 200-250 words/min | 10 | | |
| | | >250 words/min | 0 | | |
| Agent number of interruptions | Range | 0-1 | 15 | 15 | The agent should not interrupt the customer. Agents who interrupt customers no more than twice receive a more favorable score. |
| | | 2 | 10 | | |
| | | >2 | 0 | | |
| Agent crosstalk duration | Range | <3 seconds | 12.5 | 12.5 | Crosstalk can indicate that a call is not going well. Agents should avoid crosstalk. |
| | | 3-5 seconds | 7.5 | | |
| | | >5 seconds | 0 | | |
| Speech Tags | = Beginning, 15 seconds | Greetings, Party = Agent | 5 | 5 | |
| SUM | | | | 100 | |
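To see how the example rule above turns into a single score, the Python sketch below evaluates it for one agent using hypothetical measurements. Agent-prefixed attributes use that agent's measurements. Customer and conversation-level attributes would be the same for every agent in the conversation. Range boundaries are an assumption: each band includes its upper bound, because the table does not say where values such as exactly 3 seconds fall. The function and key names are illustrative only.

```python
# Scores per emotion, copied from the example rule above.
CUSTOMER_EMOTION = {"Positive": 15, "Improving": 10, "Neutral": 5}
AGENT_EMOTION = {"Positive": 7.5, "Improving": 5, "Neutral": 2.5}


def band(value, bands):
    """Return the score of the first (upper_bound, score) band with value <= upper_bound.

    Assumption: each band includes its upper bound; values above the last band score 0.
    """
    for upper, score in bands:
        if value <= upper:
            return score
    return 0


def example_rule(c):
    """Partial evaluation and total score for one agent in conversation `c`."""
    partial = {
        "Duration": band(c["duration_min"], [(1, 10), (2, 7.5), (3, 5)]),
        "Tags": 10 if "Happy customer" in c["tags"] else 0,
        "Customer emotion": CUSTOMER_EMOTION.get(c["customer_emotion"], 0),
        "Agent emotion": AGENT_EMOTION.get(c["agent_emotion"], 0),
        "Agent talking ratio": 10 if c["agent_talking_ratio_pct"] < 65 else 0,
        "Agent average talk speed": band(c["words_per_min"], [(100, 0), (200, 15), (250, 10)]),
        "Agent number of interruptions": band(c["interruptions"], [(1, 15), (2, 10)]),
        "Agent crosstalk duration": band(c["crosstalk_s"], [(3, 12.5), (5, 7.5)]),
        "Speech tag: Greetings (Agent, first 15 s)": 5 if c["agent_greeting_in_first_15s"] else 0,
    }
    return partial, sum(partial.values())


conversation = {  # hypothetical measurements for one agent
    "duration_min": 1.6, "tags": {"Happy customer"},
    "customer_emotion": "Improving", "agent_emotion": "Neutral",
    "agent_talking_ratio_pct": 58, "words_per_min": 170, "interruptions": 1,
    "crosstalk_s": 4, "agent_greeting_in_first_15s": True,
}
partial, total = example_rule(conversation)
for name, score in partial.items():
    print(f"{name}: {score}")
print(f"Total: {total}%")  # Total: 82.5%
```

The per-attribute lines correspond to what the Partial Evaluation table in the Details Pane lists. A conversation that hits the best value of every attribute reaches the 100 shown in the SUM row.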

Impact of attributes

NOTE: Some attributes impact all agents in the conversation (customer emotion, conversation-level attributes), while others are agent-specific (any attribute prefixed with Agent).

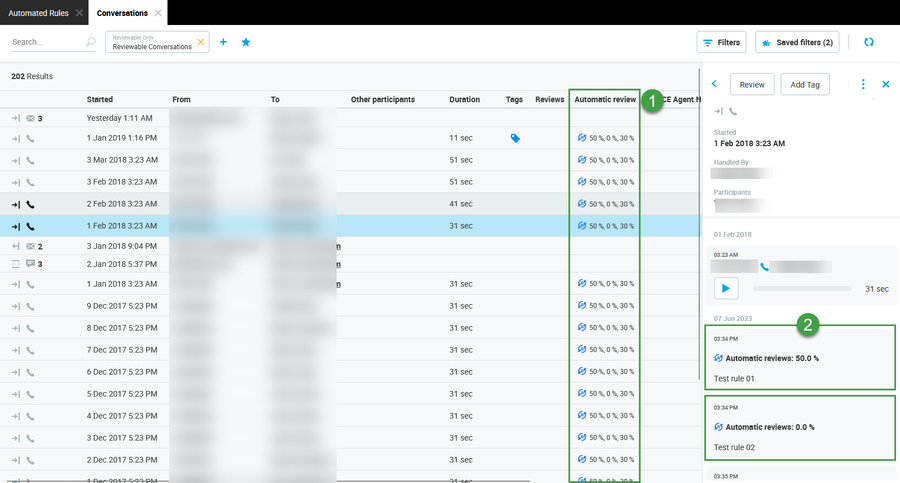

Viewing Results

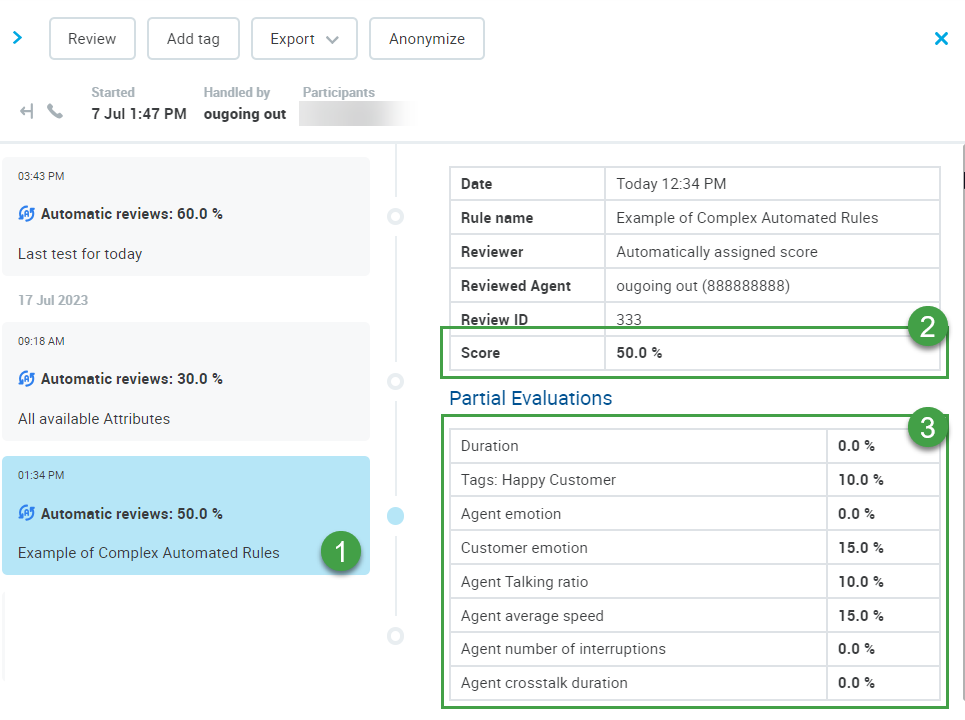

The result of the Automated Rules scoring is displayed within the Conversation Explorer.

Open the Details Pane and click an Automatic reviews bubble to view the details (including the score, #2) and a detailed breakdown in the Partial Evaluation table (#3). The Partial Evaluation table shows how the final score is calculated: all attributes defined by the Automated Rule are listed along with their scores.

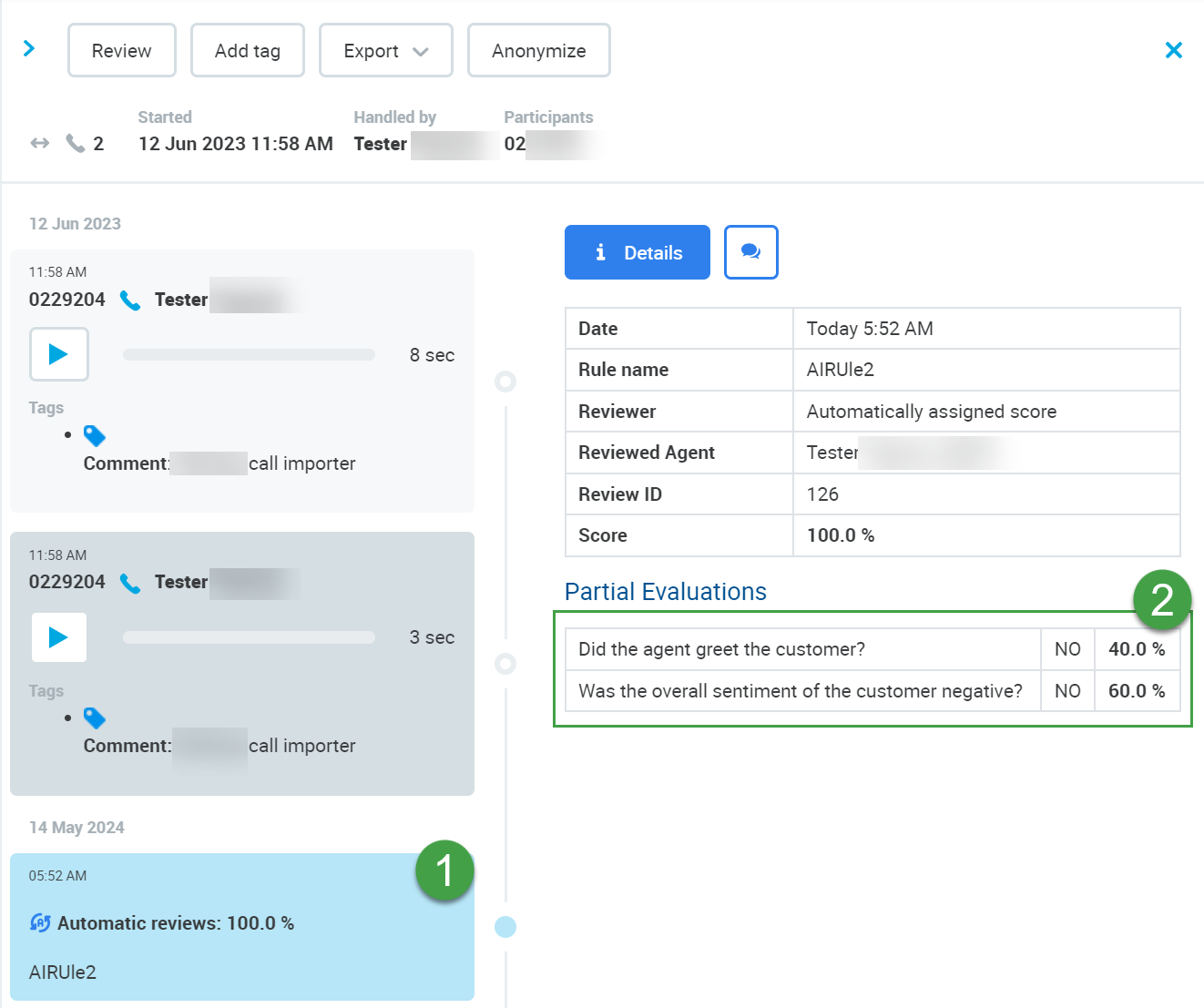

If Speech Generative AI is installed and used as part of an Automated Rule, the results are displayed in the Details Pane like those of other Automated Rules. The name of the rule is shown in the Details Pane (#1), and the questions and responses are shown along with the results (#2) under Partial Evaluations.

What is shown under Partial Evaluations if Speech Generative AI is deployed?

-

The question used in the Automated Rule – Text only

-

The result provided by the Speech Generative AI server – Possible responses include YES, NO, and N/A.

-

Calculated score – The score assigned to each question based on your configured Automated Rule.
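The Calculated score can be sketched as a lookup from the server's answer to the score configured for that response. In this Python sketch, the function name and dictionary layout are hypothetical. Treating an answer that has no configured score (for example N/A on a Yes/No question) as "could not be evaluated" is an assumption, not documented behavior.

```python
NOT_EVALUATED = "could not be evaluated"


def ai_question_score(answer, configured_scores):
    """Map one AI answer to the score configured for that response.

    answer: "YES", "NO", or "N/A" as returned by the server, or None on failure.
    configured_scores: e.g. {"YES": 20, "NO": 0} for Yes/No, plus an "N/A"
        entry when Yes/No/Not applicable is selected.
    """
    if answer is None or answer not in configured_scores:
        return NOT_EVALUATED
    return configured_scores[answer]


scores = {"YES": 20, "NO": 0, "N/A": 10}
print(ai_question_score("YES", scores))  # 20
print(ai_question_score(None, scores))   # could not be evaluated
```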

Trainers Tips

AQM Rule Best Practices

Automated Rules are designed to equal 100%. This is true whether the rule consists of a single attribute equaling 100%, or if there are multiple attributes that add up to 100%.

Currently, an attribute can only award a score when it is present; it cannot decrease the score. It is therefore important to understand what a score of 100% means for each individual rule. Consider a naming convention that readily identifies whether 100% is a positive or negative outcome, using symbols to highlight this outcome.

Create a set of rules that search for either a positive outcome or its opposite.

How do I identify Positive vs Negative?

Begin the Rule Name with a simple identifier:

Positive rule names begin with + (plus sign)

Negative rule names begin with - (minus/negative sign)

Positive Set of Rules:

Negative Set of Rules:

In this way, regardless of the name of the rule, the initial indicator (+ or -) shows whether a high score for this particular rule is good or bad.

Neutral Set of Rules:

In some cases, you may consider a third indicator (Neutral). For example, if there are rules you create that have neither a positive nor negative connotation but rather are simply being used to track the occurrence of some attribute.
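If you export rule names and scores for reporting, the naming convention above can be applied automatically. The Python sketch below is hypothetical. The documentation does not define a symbol for Neutral rules, so the sketch treats any name without a + or - prefix as neutral. The 50% threshold for a "high" score is also an arbitrary assumption.

```python
def rule_polarity(rule_name):
    """Read the polarity from the first character of the rule name."""
    name = rule_name.strip()
    if name.startswith("+"):
        return "positive"
    if name.startswith("-"):
        return "negative"
    return "neutral"  # assumption: no prefix means a tracking-only rule


def interpret(rule_name, score, threshold=50):
    """Say whether a score is good news, given the rule's polarity."""
    polarity = rule_polarity(rule_name)
    if polarity == "neutral":
        return "tracking only"
    high = score >= threshold
    return "good" if high == (polarity == "positive") else "needs attention"


print(interpret("+ Professional greeting", 80))  # good
print(interpret("- Long crosstalk", 80))         # needs attention
```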